Companies want to train and use large language models (LLMs) with their own proprietary data. Open source generative models such as Meta’s Llama 2 are pivotal in making that possible. The next hurdle is finding a platform to harness the power of LLMs. Snowflake lets you apply near-magical generative AI transformations to your data all in Python, with the protection of its out-of-the-box governance and security features.

Let’s say you have a table with hundreds of thousands of customer support ticket writeups. You may want to use an LLM to extract keywords, automatically categorize each conversation and possibly assign a sentiment value. Starting from your data in Snowflake, you can quickly spin up a powerful open source LLM (in this case, Llama2) within Snowflake, securely access your data, and accomplish this workflow in minutes. Let’s see how.

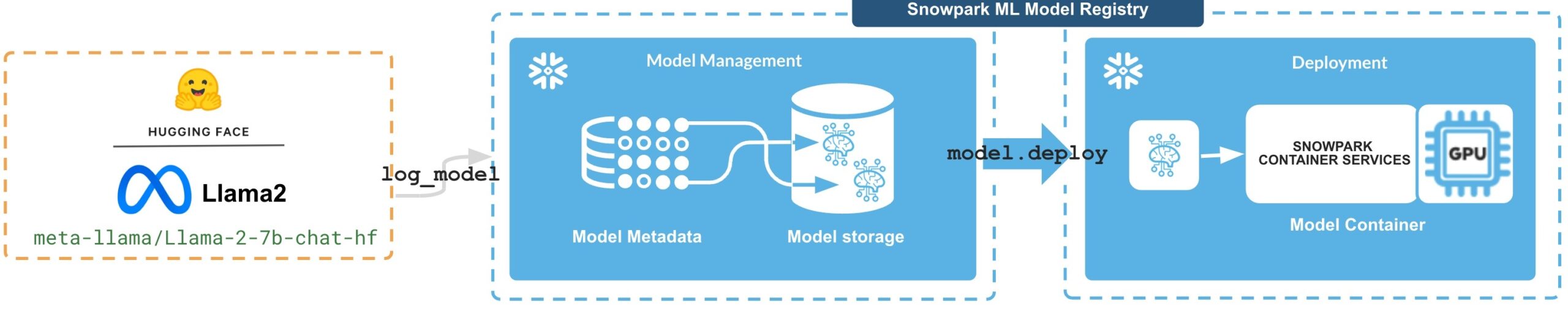

For this walkthrough, we’ll use publicly accessible data so you can try it for yourself. It relies on three foundational Snowflake building blocks: Snowpark ML, the Snowpark Model Registry and Snowpark Container Services.

- Snowpark ML is our Python software development kit that lets you create and manage end-to-end ML and AI workflows inside Snowflake without having to extract or move data. It’s backed by Snowflake’s trademark governance, scalability and ease-of-use features throughout the workflow. Snowpark ML unifies data pre-processing, feature engineering, model training, model management and integrated deployment into a single, easy-to-use Python toolkit. You can read more about it in the intro here, and in the documentation.

- At Snowflake Summit this year, we announced the Snowpark Model Registry (in private preview), which supports integrated, streamlined model deployment to Snowflake with a single API call. The model registry now also supports deployment to Snowpark Container Services, including GPU compute pools.

- Finally, Snowpark Container Services is our recently announced managed runtime to deploy, run and scale containerized jobs, services and functions. Here’s a technical introduction.

NOTE: Please contact your Snowflake account team to join the private previews of Snowpark Container Services and the Snowpark Model Registry, and get access to documentation.

Pre-LLM setup

Setting up a Compute Pool

You’ll need to create a compute pool with GPUs. To run Llama2-7B, let’s specify GPU_3 as the instance_family.

    CREATE COMPUTE POOL MY_COMPUTE_POOL with instance_family=GPU_3 min_nodes=1 max_nodes=1;

Install Snowpark ML

You can install Snowpark ML by following the instructions in our docs. For instructions on how to create a model registry, please use the preview registry documentation made available by your Snowflake account team.

Deploying an LLM from Hugging Face in 4 simple steps

Step 1: Obtain and prepare the model for deployment

Let’s use Llama2-7B from Hugging Face, via our convenient wrapper around the Hugging Face transformers API. NOTE – To use Llama2 with Hugging Face, you need a Hugging Face token. This is due to Meta’s specific Terms of Service related to Llama2 usage.

    HF_AUTH_TOKEN = "..."  # Your token from Hugging Face

    from transformers import pipeline
    from snowflake.ml.model.models import huggingface_pipeline

    llama_model = huggingface_pipeline.HuggingFacePipelineModel(
        task="text-generation",
        model="meta-llama/Llama-2-7b-chat-hf",
        token=HF_AUTH_TOKEN,
        return_full_text=False,
        max_new_tokens=100,
    )

Step 2: Register the model

Next, we’ll use the Model Registry’s log_model API in Snowpark ML to register the model, passing in a model name, a free-form version string and the model from above.

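    MODEL_NAME = "LLAMA2_MODEL_7b_CHAT"
    MODEL_VERSION = "1"

    # `registry` is the model registry object created by following the
    # preview registry documentation mentioned above.
    llama_model = registry.log_model(
        model_name=MODEL_NAME,
        model_version=MODEL_VERSION,
        model=llama_model,
    )

Step 3: Deploy the model to Snowpark Container Services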

Let’s now deploy the model to our compute pool.

    from snowflake.ml.model import deploy_platforms

    llama_model.deploy(
        deployment_name="llama_predict",
        platform=deploy_platforms.TargetPlatform.SNOWPARK_CONTAINER_SERVICES,
        options={
            "compute_pool": "MY_COMPUTE_POOL",
            "num_gpus": 1,
        },
    )

Once your deployment spins up, the model will be available as a Snowpark Container Services endpoint.

You can see how Snowpark ML streamlines LLM deployment by taking care of creating the corresponding Snowpark Container Services SERVICE definition, packaging the model in a Docker image together with its runtime dependencies, and starting up the service with that image in the specified compute pool.

Step 4: Test-driving the deployment

For our example, we will use the model to run text categorization for a news items dataset from Kaggle, which we first store in a Snowflake table. Here’s a sample entry from this data set:

    {
        "category": "ARTS",
        "headline": "Start-Art",
        "authors": "Spencer Wolff, ContributorJournalist",
        "link": "https://www.huffingtonpost.com/entry/startart_b_5692968.html",
        "short_description": "For too long the contemporary art world has been the exclusive redoubt of insiders, tastemakers, and a privileged elite. Gertrude has exploded this paradigm, and fashioned a conversational forum that democratizes and demystifies contemporary art.",
        "date": "2014-08-20"
    }

Download the data set, and load it into a Snowflake table:

    import pandas as pd

    news_dataset = pd.read_json("News_Category_Dataset_v3.json", lines=True).convert_dtypes()

    NEWS_DATA_TABLE_NAME = "news_dataset"
    news_dataset_sp_df = session.create_dataframe(news_dataset)
    news_dataset_sp_df.write.mode("overwrite").save_as_table(NEWS_DATA_TABLE_NAME)

Here’s a breakdown of the task list for our use case:

- Assign a category to each news item in the table.

- Assign an importance score of 1 (low) – 10 (high) to each item.

- Extract a list of keywords from each item.

- Return the above results as a formatted, valid JSON object that conforms to a schema specified in the prompt.
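Because the last task asks for JSON with a fixed shape, it can help to check each parsed response before relying on it. The following is a minimal sketch, assuming the three fields named in the task list (category, keywords, importance); the function name `validate_result` is hypothetical and not part of Snowpark ML.

```python
def validate_result(result: dict) -> list[str]:
    """Return a list of problems; an empty list means the result looks valid."""
    problems = []

    # The category must be a plain string.
    if not isinstance(result.get("category"), str):
        problems.append("category must be a string")

    # The keywords must be a list whose items are all strings.
    keywords = result.get("keywords")
    if not isinstance(keywords, list) or not all(isinstance(k, str) for k in keywords):
        problems.append("keywords must be a list of strings")

    # The importance must be an integer from 1 to 10.
    # bool is a subclass of int in Python, so it is excluded explicitly.
    importance = result.get("importance")
    if (
        isinstance(importance, bool)
        or not isinstance(importance, int)
        or not 1 <= importance <= 10
    ):
        problems.append("importance must be an integer from 1 to 10")

    return problems


print(validate_result({"category": "Art", "keywords": ["Gertrude"], "importance": 9}))
```

Returning a list of problems rather than raising on the first one makes it easier to log every issue for a row and decide later whether to retry or discard it.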

Here’s the detailed prompt to accomplish these tasks. Note that the prompt itself will vary depending on your use case.

    prompt_prefix = """[INST] <<SYS>>
    Your output will be parsed by a computer program as a JSON object. Please respond ONLY with valid json that conforms to this JSON schema: {"properties": {"category": {"type": "string","description": "The category that the news should belong to."},"keywords": {"type": "array","description": "The keywords that are mentioned in the news.","items": [{"type": "string"}]},"importance": {"type": "number","description": "An integer from 1 to 10 to show if the news is important. The higher the number, the more important the news is."}},"required": ["category","keywords","importance"]}

    As an example, input "Residents ordered to evacuate amid threat of growing wildfire in Washington state, medical facilities sheltering in place" results in the json: {"category": "Natural Disasters","keywords": ["evacuate", "wildfire", "Washington state", "medical facilities"],"importance": 8}
    <</SYS>>

    """

    prompt_suffix = "[/INST]"

Call the model, providing the modified data set with the ‘inputs’ column that contains the prompt.
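The ‘inputs’ column can be assembled by wrapping each news item’s text in the prompt prefix and suffix. Here is a minimal pure-Python sketch of that string construction, assuming `short_description` is the field sent to the model. The helper names `build_prompt` and `add_inputs` and the shortened stand-in prefix are hypothetical; the `<<SYS>>` markers follow the Llama 2 chat prompt format. In practice, the same concatenation would be applied as a column expression on the Snowpark dataframe.

```python
# Shortened stand-in for the full prompt_prefix; the real one carries the
# JSON schema and the worked example.
PROMPT_PREFIX = "[INST] <<SYS>>\nRespond ONLY with valid JSON.\n<</SYS>>\n\n"
PROMPT_SUFFIX = "[/INST]"


def build_prompt(text: str, prefix: str = PROMPT_PREFIX, suffix: str = PROMPT_SUFFIX) -> str:
    """Return prefix + news text + suffix as a single model input string."""
    return f"{prefix}{text.strip()} {suffix}"


def add_inputs(rows: list[dict]) -> list[dict]:
    """Return copies of the rows with an added 'inputs' key holding the prompt."""
    # Assumption: 'short_description' is the text we want the model to classify.
    return [{**row, "inputs": build_prompt(row["short_description"])} for row in rows]


sample = [
    {"headline": "Start-Art", "short_description": "Gertrude demystifies contemporary art."}
]
with_inputs = add_inputs(sample)
print(with_inputs[0]["inputs"])
```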

    # input_df is the news table with the added 'inputs' column;
    # "llama_predict" is the deployment name chosen in Step 3.
    res = llama_model.predict(
        deployment_name="llama_predict",
        data=input_df,
    )

This returns a Snowpark dataframe with an output column that contains the model’s response for each row. The raw response interleaves text and the expected JSON output, and looks like the output below.

    [{"generated_text": " Here is the JSON output for the given input:\n{\n\"category\": \"Art\",\n\"keywords\": [\n\"Gertrude\",\n\"contemporary art\",\n\"democratization\",\n\"demystification\"\n],\n\"importance\": 9\n\n}\n\nNote that I have included the required fields \"category\", \"keywords\", and \"importance\" in the JSON output, and have also included the specified types for each"}]

After post-processing to format this raw output for readability, here is what it looks like:

    {
        "category": "Art",
        "keywords": [
            "Gertrude",
            "contemporary art",
            "democratization",
            "demystification"
        ],
        "importance": 9
    }

And that’s it! You have used an LLM to perform a sophisticated extraction and classification task on text data in Snowflake. The process took only a few lines of Python using Snowpark ML, and used the full power of Meta’s 7-billion parameter Llama2 model running in Snowpark Container Services. For more on running open source LLMs with Snowpark Container Services, follow our Medium channel and subscribe to the Inside the Data Cloud blog.
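The post-processing step mentioned earlier is not spelled out in this walkthrough. One possible approach, shown as a minimal sketch, is to scan the generated text for the first substring that parses as a JSON object. The function name `extract_json` is hypothetical, and the sketch assumes you have already pulled the `generated_text` string out of the model’s response.

```python
import json


def extract_json(generated_text: str) -> dict:
    """Return the first JSON object embedded in free-form model output."""
    decoder = json.JSONDecoder()
    start = generated_text.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value starting at `start` and
            # ignores any trailing text after it.
            obj, _ = decoder.raw_decode(generated_text, start)
            return obj
        except json.JSONDecodeError:
            # Not valid JSON from this brace; try the next one.
            start = generated_text.find("{", start + 1)
    raise ValueError("no JSON object found in model output")


raw = (
    " Here is the JSON output for the given input:\n"
    '{\n"category": "Art",\n"keywords": [\n"Gertrude",\n"contemporary art"\n],\n"importance": 9\n\n}\n\n'
    "Note that I have included the required fields"
)
parsed = extract_json(raw)
print(json.dumps(parsed, indent=2))
```

Using the decoder’s `raw_decode` avoids hand-written brace matching, which can break when keywords or descriptions themselves contain braces.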