Blog

Build, Deploy and Serve Models at Scale with Snowflake ML

Read about the latest generally available announcements for building and deploying models at scale in Snowflake.

Overview

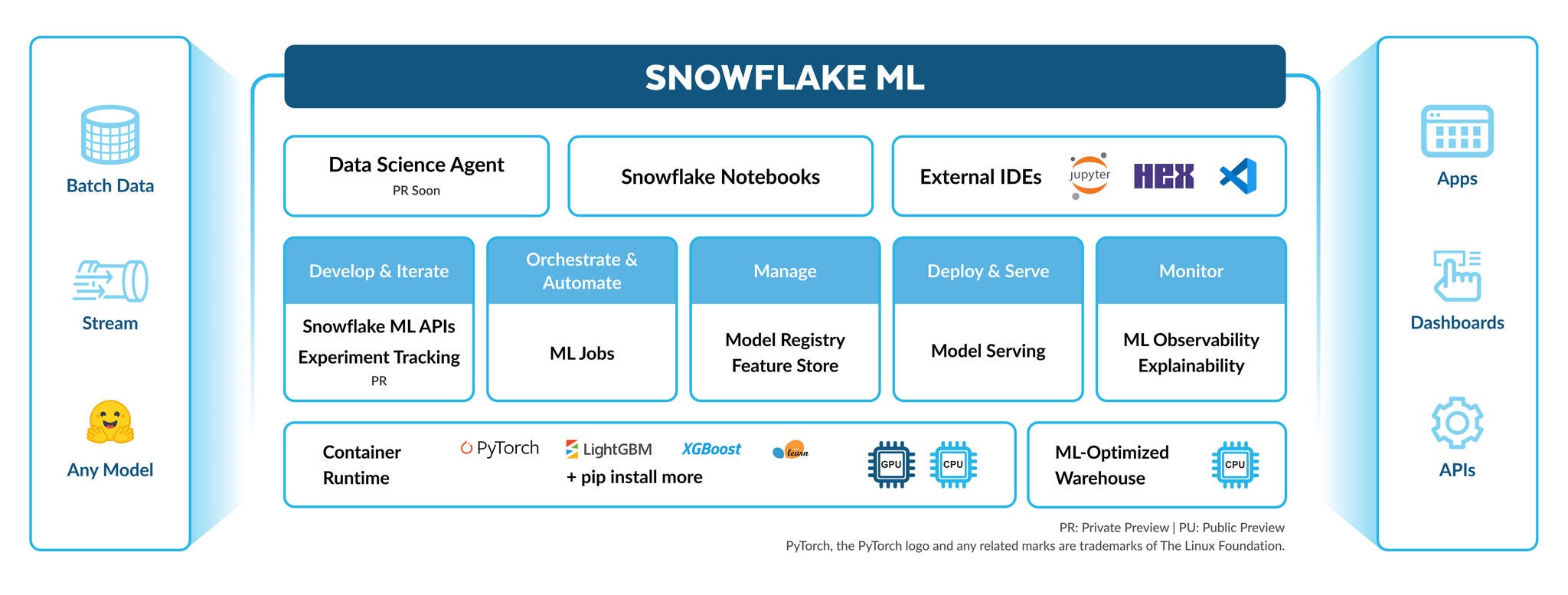

Develop, deploy and monitor ML features and models at scale on a fully integrated platform that brings tools, workflows and compute infrastructure to the data.

Unify model pipelines end to end with any open source model on the same platform where your data lives.

Scale ML pipelines over CPUs or GPUs with built-in infrastructure optimizations — no manual tuning or configuration required.

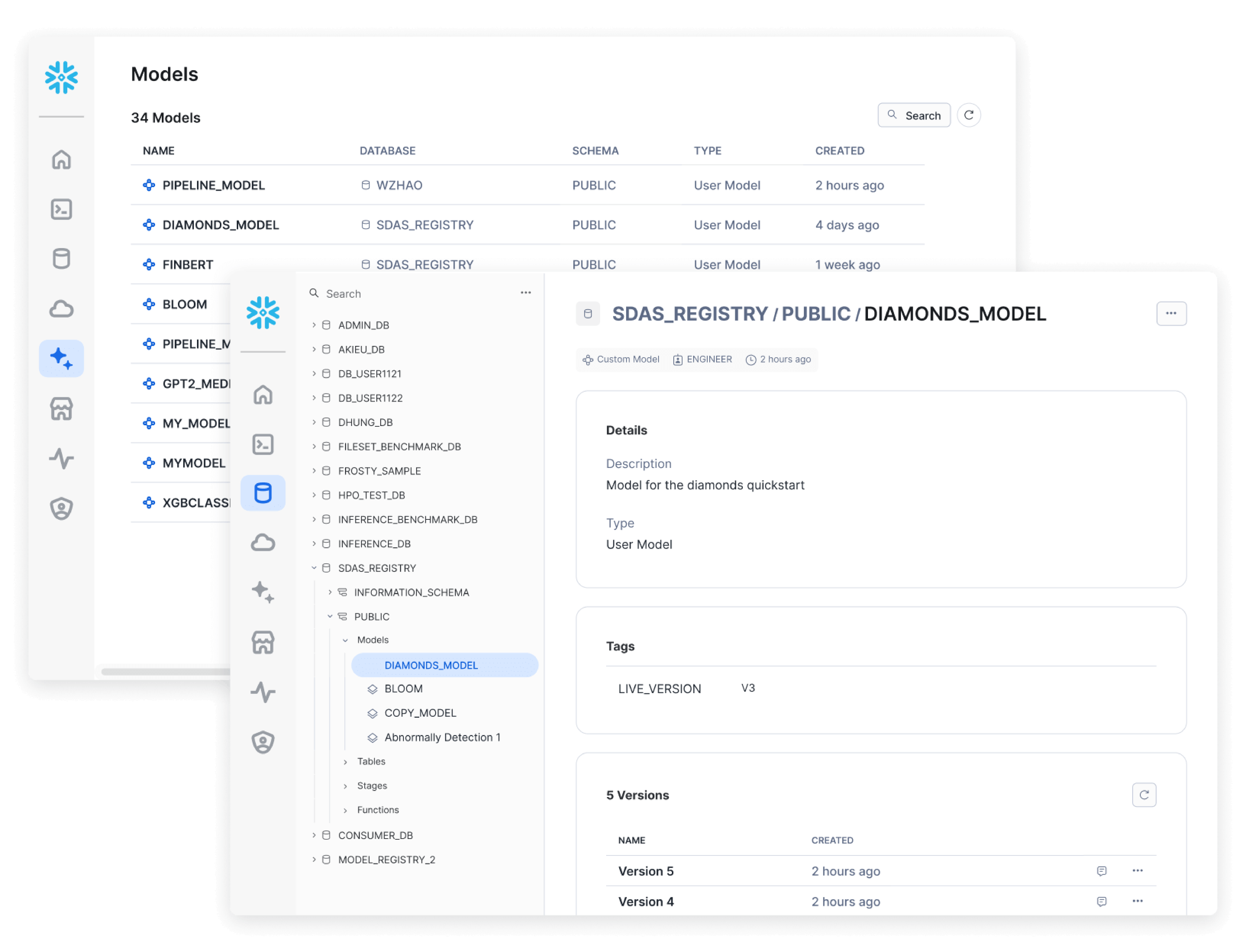

Discover, manage and govern features and models in Snowflake across the entire lifecycle.

ML Workflow

Model Development

Optimize data loading and distribute model training from Snowflake Notebooks on Container Runtime, or from any IDE of your choice with ML Jobs.

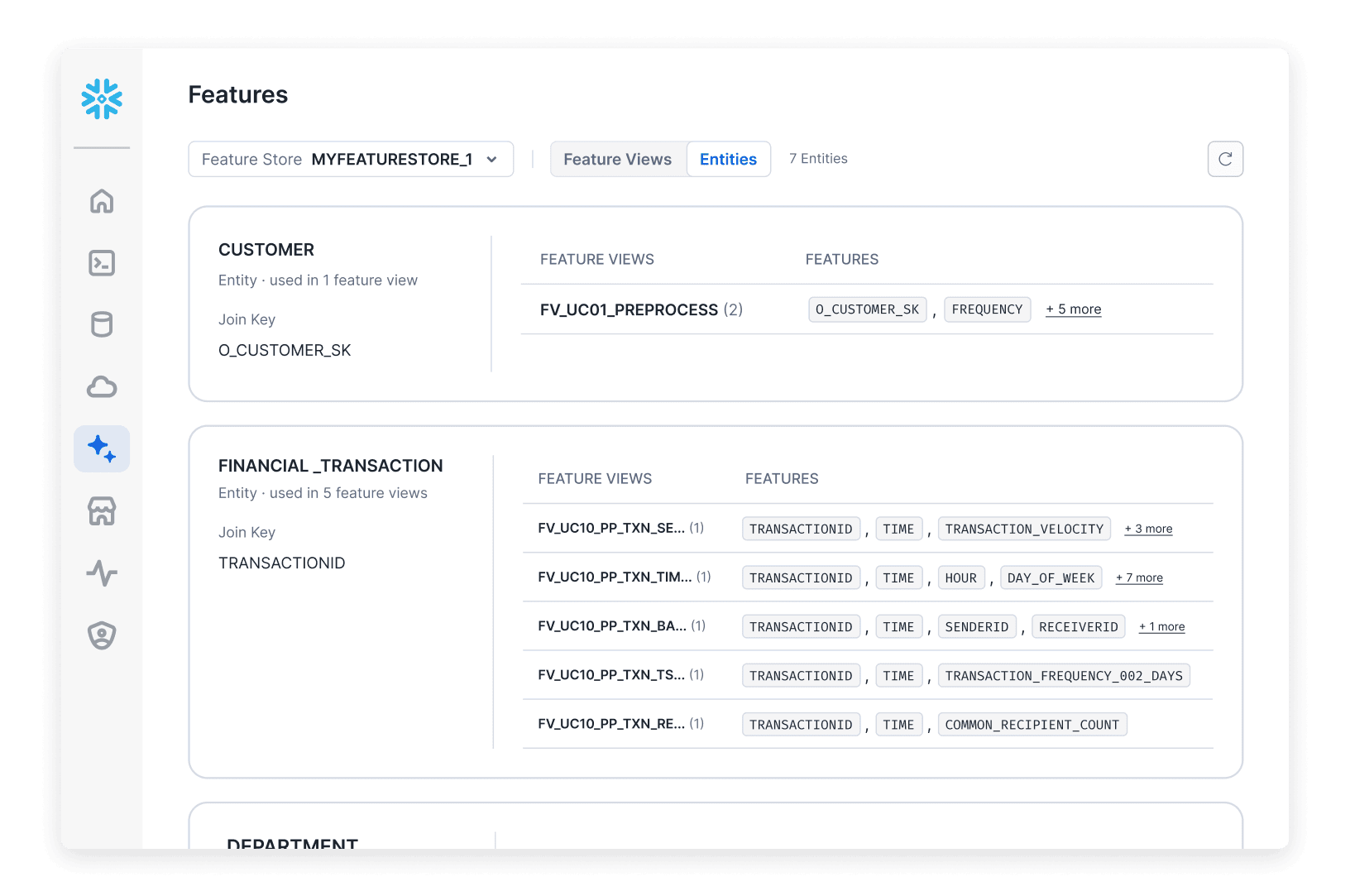

Feature Management

Create, manage and serve ML features with continuous, automated refresh on batch or streaming data using the Snowflake Feature Store. Promote discoverability, reuse and governance of features across training and inference.

Production

Log models built anywhere to the Snowflake Model Registry and serve them for real-time or batch predictions on Snowflake data with distributed GPUs or CPUs.

Learn more about the integrated features for development and production in Snowflake ML
