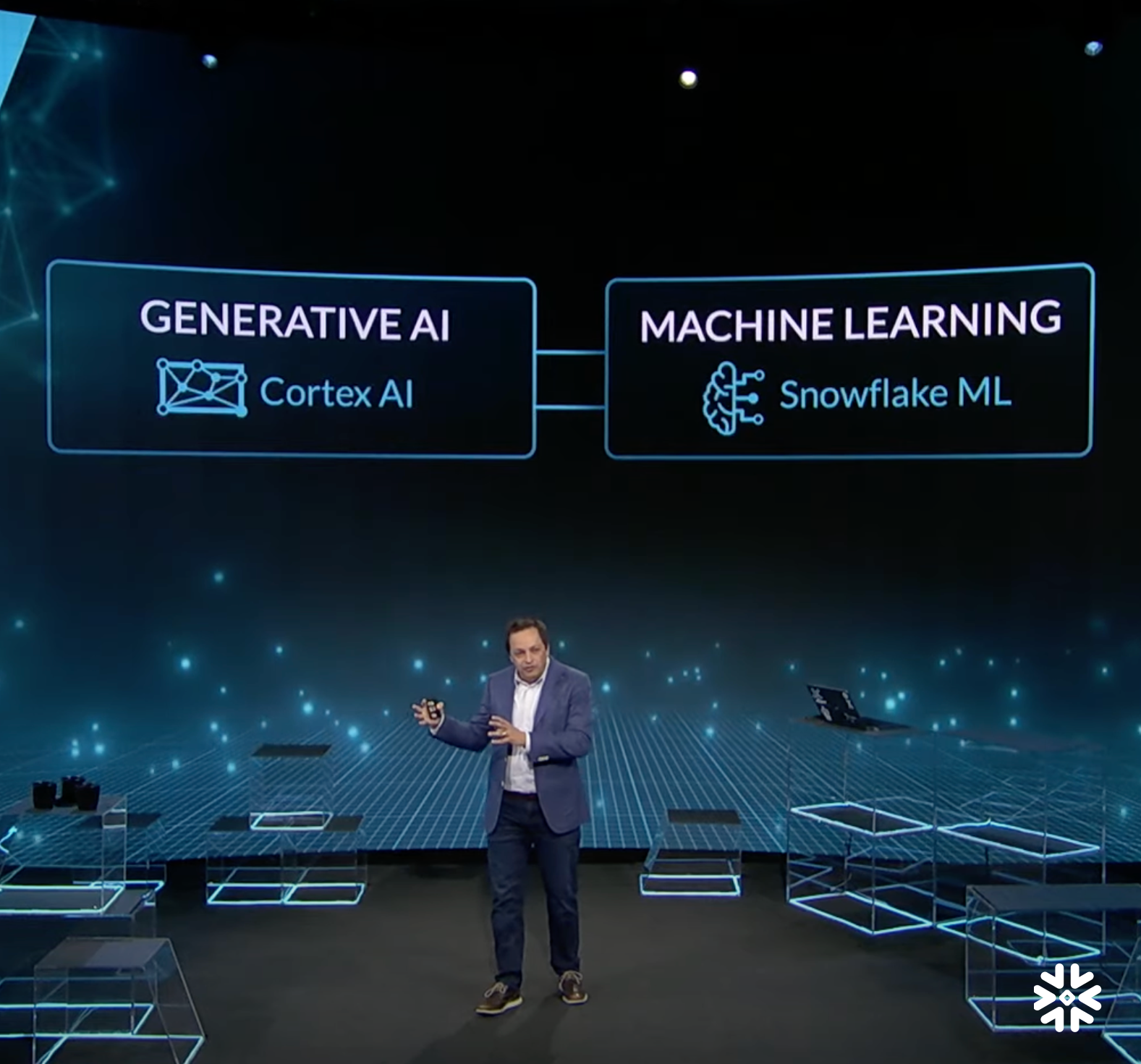

In the race to AI success, organisations need integrated data and AI capabilities that let them rapidly build and scale the next generation of AI-powered applications. Discover how Snowflake's unified, interoperable platform accelerates time to value by reducing manual configuration, encouraging data sharing, and supporting end-to-end governance, privacy and security for trusted, widely adopted AI workflows.

Join us to learn about the latest generative AI and ML innovations, see the technology in action through demos, and hear real-life stories about how Snowflake has helped customers drive productivity in their day-to-day use cases. In this session, Snowflake experts and key partners will share demos and best practices, and show you how to:

- Accelerate unstructured data processing from ingestion to downstream analytics

- Build data agents that make all your data accessible to AI agents and applications

- Streamline end-to-end machine learning workflows and develop models faster and at lower cost with a container-based runtime
