Designed for Excellence: Introducing the Snowflake Well-Architected Framework

Why a well-architected framework matters

As data platforms evolve into enterprise-critical ecosystems, organizations are under pressure to move faster, secure more and spend less — all without sacrificing trust or performance.

While the industry has long adopted “well-architected” frameworks for cloud infrastructure, data platforms have often lacked a comparable blueprint that unites security controls, reliability, performance, operations and financial efficiency under a single platform architecture.

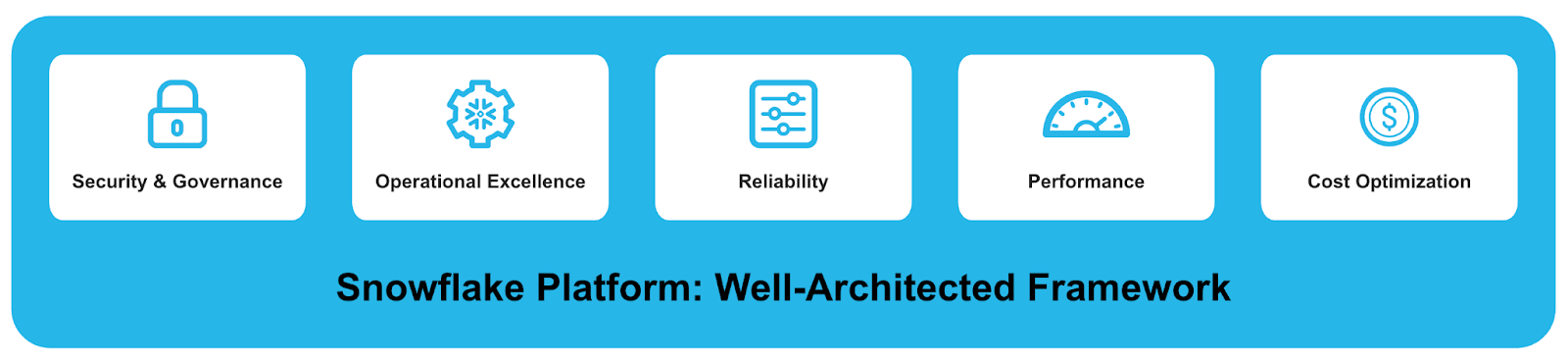

The Snowflake Well-Architected Framework (WAF) fills that gap. It distills years of customer experience, engineering best practices and platform patterns into five core pillars:

- Security and governance

- Operational excellence

- Reliability

- Performance optimization

- Cost optimization

Together, these pillars form a continuous improvement model for customers building on the Snowflake AI Data Cloud — a framework not just to deploy correctly but to evolve intelligently.

Built for the Snowflake platform

Unlike traditional frameworks that rely on third-party tooling or infrastructure-specific controls, Snowflake WAF is natively enabled through the platform itself. Every principle — whether securing PII, automating pipelines or managing consumption — is expressed through Snowflake features customers already use every day: Horizon Catalog, Dynamic Tables, Cortex AI governance, resource monitors and FinOps dashboards.

The Snowflake WAF is a design language for excellence in the Data Cloud — helping to transform best practices into built-in capabilities.

The five pillars of the Snowflake Well-Architected Framework

1. Security and governance: Protect confidently

Security and governance controls form the foundation of every Snowflake Well-Architected design. They help customers ensure data remains protected, private and compliant across every layer of the platform, from encryption to AI governance.

Core foundations

Encryption and key management

Safeguard data at rest and in transit using defense-in-depth encryption and customer-controlled keys.

End-to-end encryption with TLS in transit and AES-256 at rest

Customer-managed keys enabled with Bring Your Own Key (BYOK) and Tri-Secret Secure for full control

Hierarchical key management with automatic key rotation every 30 days

Root keys protected by Hardware Security Modules (HSMs)

Optional periodic data re-keying to support compliance or internal policy requirements

Compliance and certifications

Snowflake maintains a broad compliance portfolio, enabling customers to confidently address regulatory obligations across sectors and geographies.

SOC 1/2 Type II, HIPAA/HITRUST, PCI-DSS, ISO 27001/27017/27018/9001

Government standards: FedRAMP (Moderate/High), CJIS, DoD IL4/IL5, IRS Publication 1075, ITAR, GovRAMP

Regional requirements: IRAP (Australia), C5/TISAX (Germany), K-FSI (Korea), CE+ (UK)

Data residency and sovereignty controls with geographic data placement options

Network security and zero trust

Design for zero implicit trust, validating that every connection, identity and network path is explicitly authorized.

Private connectivity using AWS PrivateLink, Azure Private Link or Google Private Service Connect

VPC/VNet deployment options for full network isolation

Network policies, rules and private endpoints to control exposure to public internet traffic

IP allow/deny lists and session policies to help enforce least-privilege connectivity
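To make the allow/deny evaluation concrete, here is a local Python sketch that checks a client IP against allowed and blocked CIDR ranges using the standard `ipaddress` module. It assumes a default-deny posture (an address must match an allowed range) and that blocked ranges take precedence over allowed ones. The function name and sample ranges are hypothetical, and the sketch only approximates how a Snowflake network policy behaves, so confirm precedence rules against the network policy documentation.

```python
import ipaddress
from typing import Iterable


def _networks(cidrs: Iterable[str]) -> list:
    # strict=False accepts entries like "10.0.0.5/24" by masking off the host bits
    return [ipaddress.ip_network(c, strict=False) for c in cidrs]


def is_connection_allowed(client_ip: str, allowed: Iterable[str], blocked: Iterable[str]) -> bool:
    """Default-deny check: the IP must match an allowed range and no blocked range."""
    ip = ipaddress.ip_address(client_ip)
    # Blocked ranges are checked first so a narrow block overrides a broader allow
    if any(ip in net for net in _networks(blocked)):
        return False
    # Zero implicit trust: anything not explicitly allowed is rejected
    return any(ip in net for net in _networks(allowed))


if __name__ == "__main__":
    allowed = ["192.168.1.0/24", "10.0.0.0/8"]  # hypothetical corporate ranges
    blocked = ["192.168.1.99/32"]               # hypothetical compromised host
    for candidate in ["192.168.1.10", "192.168.1.99", "172.16.0.1"]:
        print(candidate, is_connection_allowed(candidate, allowed, blocked))
```

Evaluating the blocked list first lets a single compromised host be cut off without rewriting the broader allowed range.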

Authentication and access control

Protect access to Snowflake through modern identity standards and fine-grained authorization models.

Multi-SAML IdP federation with adaptive authentication policies

Role-based access control (RBAC) using database roles and role hierarchies

Identifier-first authentication for a simplified user experience

OAuth 2.0 and key-pair authentication for secure API and automation access

Row access, masking and projection policies for contextual data privacy enforcement
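Role hierarchies and masking policies both come down to one question: does the current role, directly or through roles granted to it, carry a given entitlement? The Python sketch below models that locally with a breadth-first walk of a role graph and a masking rule built on top of it. The role names, the `GRANTS` mapping and `mask_email` are hypothetical; in Snowflake the equivalent logic lives in role grants and SQL policy bodies, where context functions such as `IS_ROLE_IN_SESSION` serve the hierarchy-aware check.

```python
from collections import deque

# Hypothetical hierarchy: each role maps to the roles granted to it (and therefore inherited)
GRANTS = {
    "PLATFORM_ADMIN": ["DATA_ENGINEER"],
    "DATA_ENGINEER": ["ANALYST"],
    "PRIVACY_OFFICER": ["PII_READER", "ANALYST"],
    "ANALYST": [],
    "PII_READER": [],
}


def effective_roles(role: str, grants: dict = GRANTS) -> set:
    """Breadth-first walk: a role inherits every role granted to it, transitively."""
    seen = {role}
    queue = deque([role])
    while queue:
        current = queue.popleft()
        for child in grants.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def mask_email(value: str, current_role: str) -> str:
    """Mimics a masking policy: only roles that inherit PII_READER see the raw value."""
    if "PII_READER" in effective_roles(current_role):
        return value
    local, _, domain = value.partition("@")
    return "*" * len(local) + "@" + domain


if __name__ == "__main__":
    for role in ["PRIVACY_OFFICER", "PLATFORM_ADMIN"]:
        print(role, mask_email("jane@example.com", role))
```

Keeping the inheritance walk separate from the masking rule means a new role gains or loses access through grants alone, without editing the policy.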

Advanced privacy controls

Enable privacy-preserving collaboration and responsible AI adoption through native data-centric privacy mechanisms.

Differential Privacy for statistical privacy protection

Data Clean Rooms for governed multiparty collaboration

Aggregation and projection policies to enforce controlled data exposure

LLM-powered discovery with Universal Search and data classification with Snowflake Horizon Catalog
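Differential privacy and aggregation policies rest on two simple ideas: add calibrated noise to aggregate results, and withhold groups too small to hide an individual. The Python sketch below shows the textbook Laplace mechanism (noise scale = sensitivity / epsilon) next to a minimum-group-size filter. It is a generic illustration, not Snowflake's implementation; the function names, the epsilon value and the threshold of 5 are assumptions, and real aggregation policies may treat small groups differently than simply dropping them.

```python
import random


def laplace_scale(sensitivity: float, epsilon: float) -> float:
    """Scale b of the Laplace noise; a larger epsilon means less noise and weaker privacy."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sensitivity / epsilon


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query, whose sensitivity is 1."""
    b = laplace_scale(1.0, epsilon)
    # The difference of two independent exponentials with mean b is Laplace(0, b)
    noise = rng.expovariate(1 / b) - rng.expovariate(1 / b)
    return true_count + noise


def suppress_small_groups(group_counts: dict, min_group_size: int = 5) -> dict:
    """Withhold groups below the threshold (a simplification of aggregation policies)."""
    return {k: v for k, v in group_counts.items() if v >= min_group_size}


if __name__ == "__main__":
    rng = random.Random(42)
    counts = {"north": 120, "south": 3, "east": 57}  # hypothetical per-region counts
    released = suppress_small_groups(counts)
    print({region: round(noisy_count(c, epsilon=1.0, rng=rng), 1) for region, c in released.items()})
```

Halving epsilon doubles the noise scale, which is the core privacy/accuracy trade-off a privacy budget manages.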

Enhanced monitoring and governance

Extend visibility beyond compliance into real-time risk detection, lineage and data quality assurance.

Snowflake Trust Center for centralized security monitoring and risk posture

Data quality monitoring with automated anomaly alerts and remediation metrics

Enhanced data lineage UI with visual impact analysis for governance and audit readiness

CIS Benchmark verification through programmatic compliance checks

Business continuity and resilience

Security without resilience is incomplete. Snowflake embeds continuity and recovery into the platform fabric.

Cross-region replication and failover capabilities

Account-level replication and automated disaster recovery

Time Travel (up to 90 days) and fail-safe (7 days) for historical recovery

Zero-downtime maintenance and transparent platform updates
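The Time Travel and fail-safe windows determine which recovery path applies after data is dropped or overwritten. As a rough planning aid, the Python sketch below classifies a recovery request by the age of the change. It assumes a permanent table with a configurable Time Travel retention (0 to 90 days) followed by a 7-day fail-safe period, where fail-safe recovery is performed by Snowflake Support rather than self-service; the function and its return labels are hypothetical.

```python
from datetime import datetime, timedelta

FAIL_SAFE_DAYS = 7  # fixed fail-safe period for permanent tables


def recovery_path(changed_at: datetime, now: datetime, retention_days: int) -> str:
    """Classify how data changed at `changed_at` could still be recovered at `now`."""
    if not 0 <= retention_days <= 90:
        # 90 days is the maximum (Enterprise Edition or higher); Standard Edition allows 1
        raise ValueError("retention_days must be between 0 and 90")
    age = now - changed_at
    time_travel_window = timedelta(days=retention_days)
    fail_safe_window = time_travel_window + timedelta(days=FAIL_SAFE_DAYS)
    if age <= time_travel_window:
        return "time_travel"  # self-service: UNDROP, AT/BEFORE queries or cloning
    if age <= fail_safe_window:
        return "fail_safe"  # recoverable only through Snowflake Support
    return "outside_windows"  # rely on replicas or external copies


if __name__ == "__main__":
    now = datetime(2024, 3, 31, 12, 0)
    for days_ago in (0.5, 3, 12):
        print(days_ago, recovery_path(now - timedelta(days=days_ago), now, retention_days=1))
```

The "outside_windows" case is where cross-region replication and failover, rather than Time Travel, become the recovery mechanism.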

Snowflake differentiators

End-to-end encryption and key management: Protect data at rest and in transit using customer-controlled key hierarchies through Bring Your Own Key (BYOK) and Tri-Secret Secure.

Unified governance model: Enable consistent security, access and compliance across multicloud deployments and data collaboration environments.

Private connectivity options: Secure hybrid and cross-cloud access via AWS PrivateLink, Azure Private Link and Google Private Service Connect, eliminating exposure to the public internet.

Active metadata and lineage management: Gain visibility into data flow and dependencies using Horizon Catalog and Universal Search for automated data discovery and classification.

Privacy-preserving analytics: Enable safe, governed collaboration using Data Clean Rooms and upcoming Differential Privacy capabilities to protect sensitive data during multiparty analytics.

Outcome: A unified security and governance foundation that helps safeguard data through every lifecycle phase, protecting privacy, enabling compliance, maintaining availability and enabling trust at enterprise scale. With Snowflake’s built-in security architecture, customers can achieve and maintain continuous protection, continuous compliance and continuous innovation.

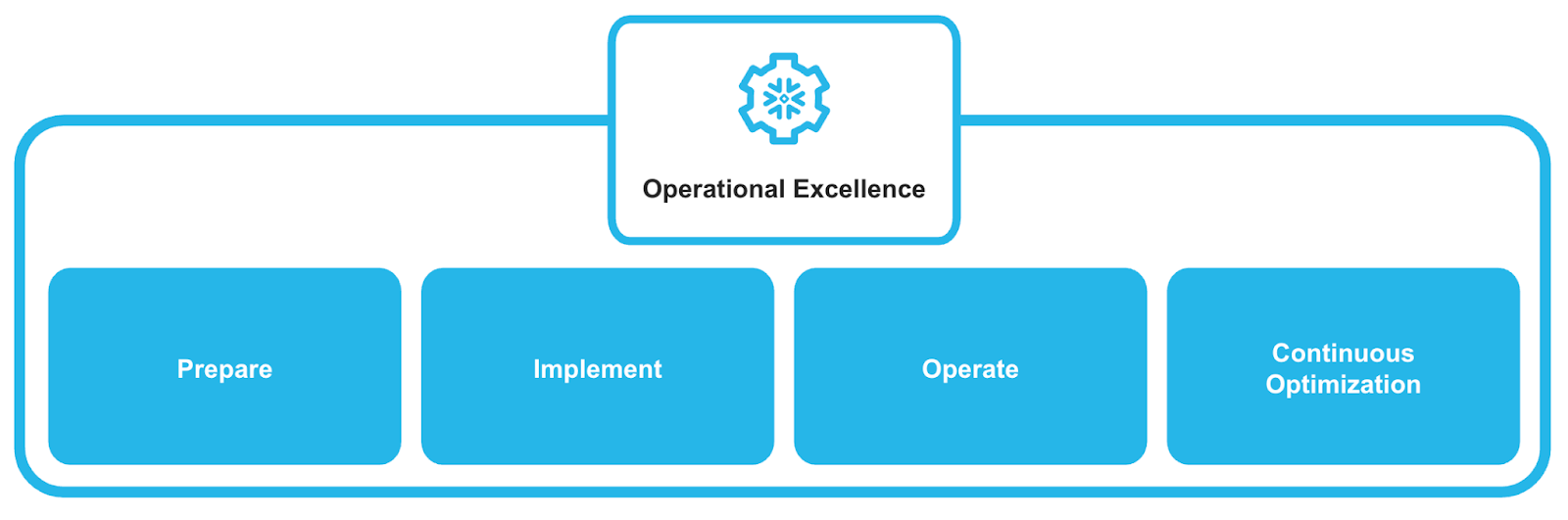

2. Operational excellence: Run intelligently

Operational excellence focuses on running and monitoring systems to deliver business value while continuously improving processes.

Architectural imperatives

Ensure operational readiness: Define SLOs, SLIs and SLA thresholds before deployment. Embed load testing and validation in CI/CD workflows to help ensure predictable performance at scale.

Automate infrastructure and maintenance: Codify environments with infrastructure-as-code and GitOps. Use Snowflake Tasks and Streams or Dynamic Tables to orchestrate pipeline operations and automate recovery.

Proactive monitoring and alerting: Move from reactive troubleshooting to predictive awareness. Implement custom alerting frameworks and leverage Snowflake Alerts for key operational metrics such as query performance, warehouse utilization and latency. Use ACCOUNT_USAGE views and Event Tables to track cost, performance and access patterns; apply Snowpark for automated anomaly detection. Extend monitoring beyond Snowsight dashboards with tailored system-health views that consolidate metrics across accounts, regions and clouds.

Operational processes: Standardize operational disciplines for change management, incident response and knowledge retention. Establish deployment pipelines with rollback strategies, maintain operational runbooks and conduct post-incident reviews. Invest in team training and certification programs to ensure operational readiness and shared accountability.

Enhance observability and issue resolution: Aggregate telemetry across queries, tasks and warehouses. Correlate logs, traces and metrics to identify root causes faster and prevent recurrence. Integrate Snowflake data with external observability tools through External Functions and native APIs for unified visibility.

Enable collaboration and sharing: Leverage Data Clean Rooms and Snowflake Native Apps for governed, multiparty collaboration while maintaining operational control.

Embed AI/ML lifecycle governance: Use Snowpark Container Services and Model Registry to manage model versioning, deployment and monitoring under consistent governance.
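Defining SLOs before deployment becomes actionable once each SLO is converted into an error budget: allowed failure = (1 - SLO target) × measurement window. The Python sketch below computes that budget and how much of it has been spent. The 99.9% target, the 30-day window and the function names are illustrative assumptions, not Snowflake-defined values; the "bad minutes" input would come from whatever SLI you track, for example failed or late pipeline runs.

```python
from datetime import timedelta


def error_budget(slo_target: float, window: timedelta) -> timedelta:
    """Allowed 'bad' time in the window, e.g. 0.1% of 30 days for a 99.9% SLO."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be a fraction such as 0.999")
    return window * (1 - slo_target)


def budget_consumed(bad_minutes: float, slo_target: float, window: timedelta) -> float:
    """Fraction of the error budget already spent; above 1.0 means the SLO is breached."""
    budget_minutes = error_budget(slo_target, window).total_seconds() / 60
    return bad_minutes / budget_minutes


if __name__ == "__main__":
    window = timedelta(days=30)
    print(error_budget(0.999, window))              # 43.2 minutes of allowed downtime
    print(budget_consumed(21.6, 0.999, window))     # half the budget used
```

A 99.9% target over 30 days allows 30 × 24 × 60 × 0.001 = 43.2 minutes; alerting on budget burn rate rather than on single failures reduces noise.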

Snowflake differentiators

Snowflake Alerts: Native condition-based notifications for real-time operational awareness

ACCOUNT_USAGE views and event tables: Comprehensive telemetry for cost, performance and access monitoring

Resource monitors: Automated consumption guardrails and budget protection

Private connectivity options: Secure networking and platform isolation

Cross-cloud replication and failover groups: Operational continuity across clouds and regions

Data Clean Rooms: Secure collaboration for shared operations under governance

External Functions: Seamless integrations with third-party systems for automation and incident management

Outcome: A predictable and measurable operational environment that delivers consistent platform performance, enables early detection of incidents and performance drift, and reduces mean time to resolution (MTTR) through automation and intelligent response.

Standardized processes, trained teams and continuous improvement cycles powered by native telemetry and AI-assisted insights ensure scalable, self-healing operations across the entire Snowflake ecosystem.

3. Reliability: Design for continuity and consistency

Reliability empowers Snowflake workloads to perform predictably, recover seamlessly and maintain trust in the data powering business decisions.

Core principles

Trustworthy data: Reliability begins with correctness. Validate data lineage, enforce schema integrity and use data contracts to prevent drift across environments.

Anticipate failure: Design architectures that assume transient errors and dependency outages will occur. Use cross-region replication, failover groups and immutable snapshots for rapid restoration.

Automate recovery: Leverage Streams, Tasks and Dynamic Tables for replay and self-healing pipelines. Automate validation and rollback to minimize recovery time.

Continuously validate: Perform regular DR and BCP simulations to ensure business continuity under stress. Version your configurations and policies to maintain consistency during rollback events.

Define shared responsibility: Understand which aspects of resilience Snowflake guarantees (platform availability, durability, encryption) and which remain within the customer’s domain (processes, pipelines, access).

Performance reliability: Design for consistent query performance under varying loads.

Manage concurrency with multicluster warehouses.

Apply auto-scaling and auto-suspension to maintain elasticity.

Optimize caching and micropartitioning for steady response times.

Monitor throughput and latency to sustain predictable performance SLAs.

Application-level reliability: Extend resilience to client applications and integrations.

Implement retry and backoff logic in API and JDBC/ODBC connections.

Adopt idempotent request patterns for reliable automation.

Manage external dependencies to prevent cascading failures.

Employ graceful degradation strategies so critical operations remain available even during partial outages.
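Retry with backoff and idempotent requests work as a pair: retries are only safe when repeating a request cannot apply its effect twice. The Python sketch below shows capped exponential backoff with full jitter around a generic callable, plus an idempotency-key guard. `TransientError`, `retry_with_backoff` and `IdempotentExecutor` are hypothetical names; in practice you would map them to the retryable errors your JDBC, ODBC or Python driver raises, and check which retries the driver already performs.

```python
import random
import time
from typing import Callable, Dict, Optional, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, throttling, dropped connections)."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Run `operation`, retrying transient failures with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller degrade gracefully
            # Full jitter: wait a random time in [0, min(max_delay, base_delay * 2^(attempt-1))]
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(rng.uniform(0, cap))
    raise AssertionError("unreachable")


class IdempotentExecutor:
    """Stores results by idempotency key so a retried request is applied only once."""

    def __init__(self) -> None:
        self._results: Dict[str, object] = {}

    def run(self, key: str, operation: Callable[[], T]) -> T:
        if key in self._results:
            return self._results[key]  # duplicate request: replay the stored result
        result = operation()
        self._results[key] = result
        return result
```

Jitter spreads retries from many clients so they do not hit a recovering service in lockstep; when retries are exhausted, a caller can fall back to cached or reduced results instead of failing outright.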

Snowflake differentiators

Snowflake Trail observability: End-to-end visibility into replication health, pipeline reliability and operational events.

Comprehensive pipeline and application monitoring: Using ACCOUNT_USAGE, event tables and alerts for real-time diagnostics.

Automated scaling for reliability and performance: Dynamic workload scaling with multicluster and serverless compute.

Resource monitors: Proactive cost and compute guardrails to prevent resource exhaustion.

Account usage views: Deep operational insights into query health, latency and error patterns.

Cross-cloud replication and failover groups: Enterprise-grade continuity features across clouds and regions.

Error notifications: Native alerting for replication lag, task failures and operational anomalies.

Outcome: A resilient, self-healing architecture that not only anticipates and recovers from failures by design, but also maintains optimal performance, promotes data quality and provides comprehensive observability across all operational dimensions.

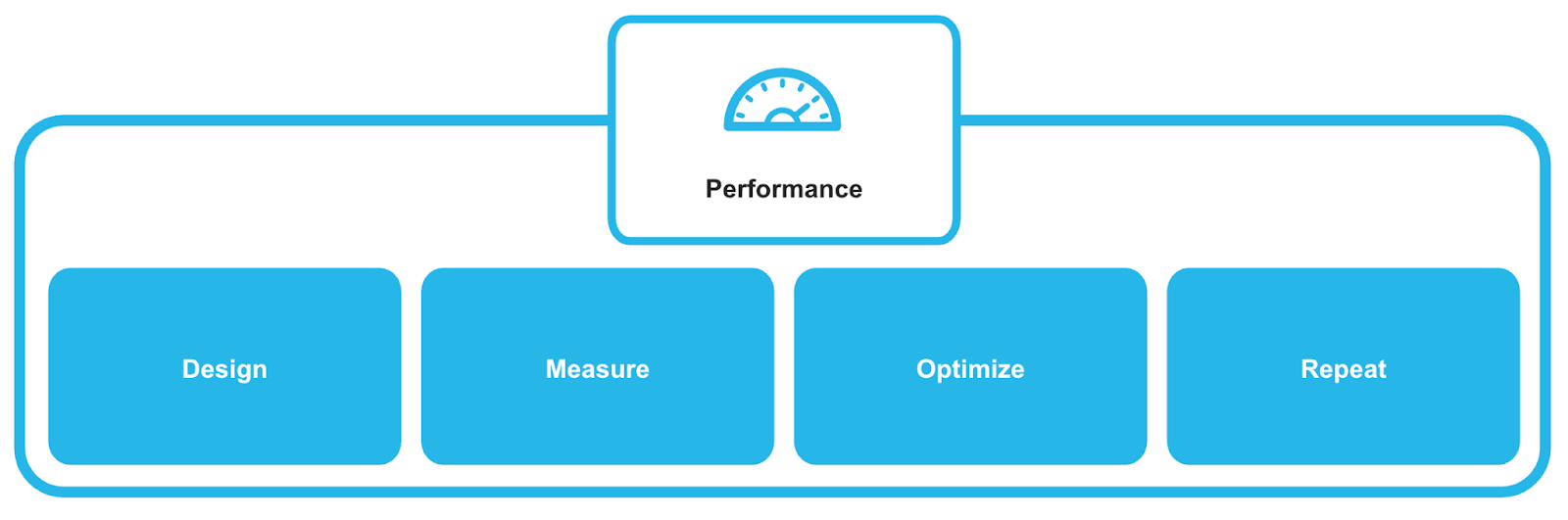

4. Performance optimization: Deliver at scale

Performance optimization in the Snowflake AI Data Cloud is about designing and maintaining predictable, efficient and scalable workloads that deliver consistent results regardless of data size or concurrency. It enables every query, pipeline and AI application to perform at its best, using the right compute, the right data structures and the right tuning patterns.

Core architectural imperatives

Workload management and isolation

Performance begins with predictable workload behavior. Separate, prioritize and scale workloads according to business criticality.

Workload segregation strategies: Dedicate warehouses for ingestion, transformation and analytics to avoid contention.

Multi-cluster warehouse configuration: Use multicluster or serverless scaling to handle spikes automatically.

Query prioritization: Apply warehouse-level queuing and resource limits to ensure SLAs for mission-critical workloads.

Session and connection management: Use session policies and connection pooling to sustain concurrency without saturation.
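Workload segregation is typically codified as one warehouse per workload class, kept in version control like other infrastructure-as-code. The Python sketch below renders `CREATE WAREHOUSE` statements from a small spec. The warehouse names, sizes and timeout values are hypothetical; the properties used (`WAREHOUSE_SIZE`, `AUTO_SUSPEND`, `AUTO_RESUME`, `MIN_CLUSTER_COUNT`, `MAX_CLUSTER_COUNT`, `SCALING_POLICY`, `STATEMENT_TIMEOUT_IN_SECONDS`, `STATEMENT_QUEUED_TIMEOUT_IN_SECONDS`) are standard warehouse settings, but verify them against the current `CREATE WAREHOUSE` reference, and note that multicluster warehouses require Enterprise Edition or higher.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WarehouseSpec:
    name: str
    size: str = "XSMALL"
    auto_suspend_s: int = 60
    min_clusters: int = 1
    max_clusters: int = 1
    scaling_policy: str = "STANDARD"
    statement_timeout_s: int = 3600
    queued_timeout_s: int = 0  # 0 means queued statements never time out

    def to_sql(self) -> str:
        props = [
            f"WAREHOUSE_SIZE = '{self.size}'",
            f"AUTO_SUSPEND = {self.auto_suspend_s}",
            "AUTO_RESUME = TRUE",
        ]
        if self.max_clusters > 1:
            # Multicluster settings are emitted only when scale-out is actually wanted
            props += [
                f"MIN_CLUSTER_COUNT = {self.min_clusters}",
                f"MAX_CLUSTER_COUNT = {self.max_clusters}",
                f"SCALING_POLICY = '{self.scaling_policy}'",
            ]
        # Timeouts cap runaway queries and bound how long statements may wait in the queue
        props += [
            f"STATEMENT_TIMEOUT_IN_SECONDS = {self.statement_timeout_s}",
            f"STATEMENT_QUEUED_TIMEOUT_IN_SECONDS = {self.queued_timeout_s}",
        ]
        return f"CREATE WAREHOUSE IF NOT EXISTS {self.name} WITH " + " ".join(props) + ";"


# Hypothetical workload classes, one warehouse each to avoid contention
WORKLOADS = [
    WarehouseSpec("ingest_wh", size="SMALL", statement_timeout_s=1800),
    WarehouseSpec("transform_wh", size="MEDIUM", auto_suspend_s=120),
    WarehouseSpec("bi_wh", size="SMALL", max_clusters=4, queued_timeout_s=300),
]

if __name__ == "__main__":
    for spec in WORKLOADS:
        print(spec.to_sql())
```

Only the concurrency-heavy BI warehouse scales out here; ingestion and transformation stay single-cluster so their cost remains predictable and attributable.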

Advanced query optimization

Fine-tune how queries execute, cache and reuse results to minimize latency and cost.

Query profiling and analysis: Use Query Profile, Query History and Snowsight Insights to identify bottlenecks.

Memory and compute management: Right-size warehouses and optimize join patterns for efficient utilization.

Result set caching and reuse: Leverage automatic result caching, materialized views and Search Optimization for repeat queries.

SQL best practices: Adopt query simplification, predicate pushdown and micropartition pruning for optimal plan generation.
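Micropartition pruning works because Snowflake keeps metadata such as per-column minimum and maximum values for each micropartition, so a selective predicate can skip partitions whose range cannot match. The Python sketch below simulates that idea for a date-range filter. The `MicroPartition` class and sample ranges are hypothetical and the real optimizer uses richer metadata, but the sketch shows why filtering on a well-clustered column scans less data.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class MicroPartition:
    partition_id: int
    min_value: date  # per-partition minimum of the filter column
    max_value: date  # per-partition maximum of the filter column


def partitions_to_scan(parts: List[MicroPartition], lo: date, hi: date) -> List[int]:
    """Keep only partitions whose [min, max] range overlaps the predicate range [lo, hi]."""
    return [p.partition_id for p in parts if p.max_value >= lo and p.min_value <= hi]


# Well clustered on order_date: ranges do not overlap, so a narrow filter touches few partitions
clustered = [
    MicroPartition(1, date(2024, 1, 1), date(2024, 1, 10)),
    MicroPartition(2, date(2024, 1, 11), date(2024, 1, 20)),
    MicroPartition(3, date(2024, 1, 21), date(2024, 1, 31)),
]
# Poorly clustered: every partition spans the whole month, so nothing can be pruned
unclustered = [MicroPartition(i, date(2024, 1, 1), date(2024, 1, 31)) for i in (1, 2, 3)]

if __name__ == "__main__":
    week = (date(2024, 1, 12), date(2024, 1, 18))
    print(partitions_to_scan(clustered, *week))
    print(partitions_to_scan(unclustered, *week))
```

The same predicate scans one partition in the clustered layout and all three in the unclustered one, which is the effect clustering keys aim for on large tables.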

Data architecture optimization

Architect your data for compute efficiency and analytical flexibility.

Table design considerations: Apply clustering keys, partitioning and compression strategies that match access patterns.

Semistructured data optimization: Prune VARIANT columns efficiently using FLATTEN, path filters and materialized projections.

External table performance: Tune Iceberg, Parquet and Delta reads with partition pruning and metadata caching.

Advanced performance features

Leverage Snowflake’s intelligent services and emerging features for sustained, autonomous performance.

Query acceleration service (QAS): Offload compute-intensive portions of queries to ephemeral accelerator clusters.

Hybrid Tables optimization: Combine transactional and analytical performance in mixed workloads.

Data sharing performance: Optimize cross-region and cross-account sharing with result-cache propagation and replication design.

Snowflake differentiators

Elastic multicluster warehouses: Automatic scaling for concurrency and throughput.

Search optimization service: Accelerates point-lookups and selective scans.

QAS: On-demand compute for complex queries.

Materialized views and Hybrid Tables: Balance latency and analytical depth.

Result caching and micropartition pruning: Reduce redundant scans and I/O.

Query profile and insights in Snowsight: Visual diagnostics for performance tuning.

Dynamic scaling policies: Enable predictable performance with cost control.

Outcome: A continuously optimized data environment that adapts dynamically to workload changes, sustains predictable performance under scale and optimizes the efficiency of every Snowflake credit consumed.

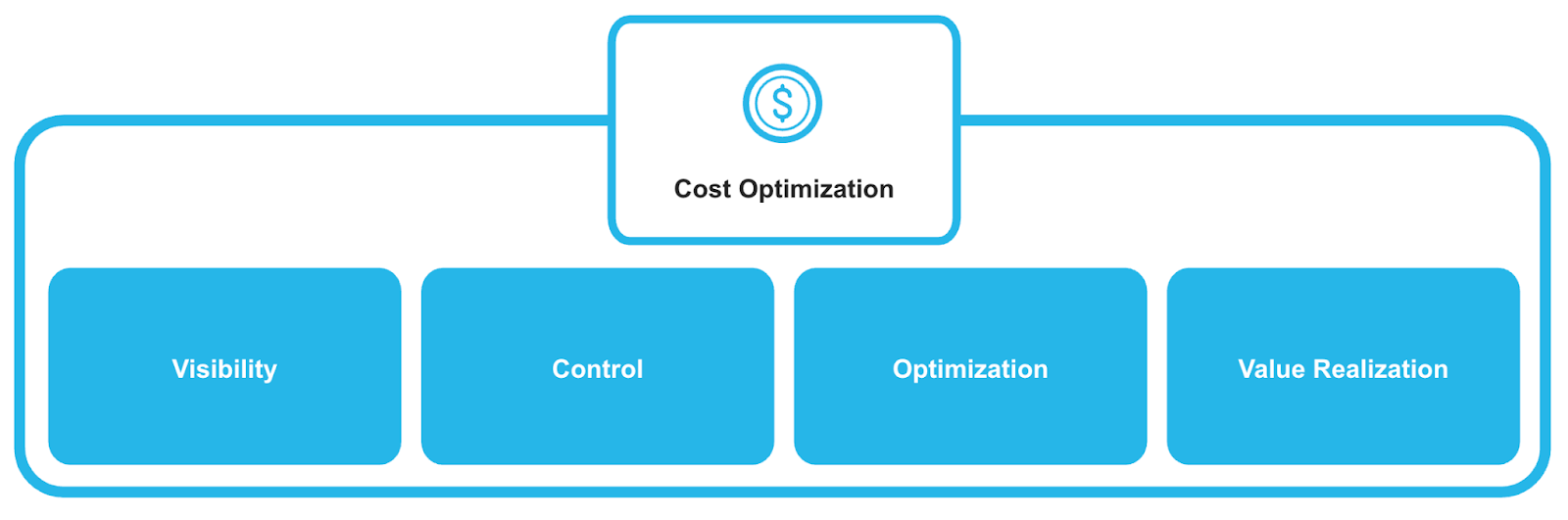

5. Cost optimization: Spend with purpose

Cost optimization helps ensure that every credit spent in the Snowflake AI Data Cloud delivers measurable business value. It’s about aligning financial accountability with technical excellence — balancing performance, scale and efficiency through automation, observability and governance.

Core architectural imperatives

Warehouse optimization best practices

Right-size, automate and isolate compute resources to optimize efficiency without compromising performance.

Auto-suspend/auto-resume: Eliminate idle spend with automatic pause/resume.

Right-sizing strategies: Match warehouse size and cluster count to concurrency and workload demand.

Multicluster optimization: Enable scale-out elasticity for high-concurrency workloads only when needed.

Per-second billing optimization: Leverage per-second metering (one-minute minimum) for granular control over compute spend.
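Per-second billing with a one-minute minimum is easiest to reason about with a little arithmetic: each time a warehouse starts or resumes it is billed for at least 60 seconds, then by the second, including idle time before auto-suspend takes effect. The Python sketch below estimates credits for one resume, run and suspend cycle. The credits-per-hour table assumes standard warehouse rates (1 credit per hour for X-Small, doubling with each size); other warehouse types differ, and the model ignores details such as exactly when suspension is applied, so treat results as estimates.

```python
# Assumed credits per hour for standard warehouses: X-Small = 1, doubling per size
CREDITS_PER_HOUR = {
    "XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8,
    "XLARGE": 16, "XXLARGE": 32, "XXXLARGE": 64, "X4LARGE": 128,
}
MINIMUM_BILLED_SECONDS = 60  # one-minute minimum each time the warehouse starts or resumes


def billed_seconds(active_seconds: float, idle_seconds: float) -> float:
    """Billed time for one resume cycle: query time plus idle time before suspension, at least 60 s."""
    return max(MINIMUM_BILLED_SECONDS, active_seconds + idle_seconds)


def credits_for_cycle(size: str, active_seconds: float, idle_seconds: float) -> float:
    """Credits consumed by one resume -> run -> auto-suspend cycle."""
    return CREDITS_PER_HOUR[size] * billed_seconds(active_seconds, idle_seconds) / 3600


if __name__ == "__main__":
    print(round(credits_for_cycle("MEDIUM", 30, 60), 4))  # 90 s billed
    print(round(credits_for_cycle("XSMALL", 10, 0), 4))   # 60 s minimum applies
```

For example, a Medium warehouse that resumes for a 30-second query and idles 60 seconds before suspending is billed for 90 seconds, or 4 × 90 / 3600 = 0.1 credits. A shorter auto-suspend trims that idle tail, but very frequent resumes each pay the 60-second minimum and start with a cold cache.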

Query performance and cost controls

Optimize every query for both speed and spend through intelligent caching, acceleration and policy controls.

Statement timeout controls: Prevent runaway costs by enforcing max execution times.

QAS: Dynamically offload compute-intensive operations to ephemeral accelerator clusters.

Result caching optimization: Leverage result and metadata caching to eliminate redundant work.

Query queue management: Control concurrency and warehouse utilization through queue thresholds and prioritization.

Data lifecycle management

Control storage costs by actively managing data retention, recovery and archival strategies.

Table type optimization: Use transient and temporary tables for noncritical or short-lived data.

Time Travel and fail-safe management: Tune retention periods to balance recovery needs and cost.

Data retention policies: Enforce organization-wide retention SLAs and purging automation.

Unused data identification: Use metadata queries and tagging to locate and retire cold or redundant data sets.
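Retention settings translate into storage in a fairly predictable way: changed or deleted data stays billable for the Time Travel retention period and then, for permanent tables, for 7 more days of fail-safe. The Python sketch below applies a simplified linear model of that behavior. It assumes constant daily churn and uses the documented limits (permanent tables: up to 90 days of Time Travel on Enterprise Edition plus 7 days of fail-safe; transient and temporary tables: at most 1 day and no fail-safe). Real usage also depends on how micropartitions are rewritten, so treat the output as an order-of-magnitude estimate; the names are hypothetical.

```python
LIMITS = {
    # table type: (max Time Travel days, fail-safe days)
    "permanent": (90, 7),  # the 90-day maximum requires Enterprise Edition or higher
    "transient": (1, 0),
    "temporary": (1, 0),
}


def historical_storage_gb(daily_churn_gb: float, table_type: str, retention_days: int) -> float:
    """Steady-state GB held for Time Travel plus fail-safe under constant daily churn."""
    max_retention, fail_safe_days = LIMITS[table_type]
    if not 0 <= retention_days <= max_retention:
        raise ValueError(f"{table_type} tables allow 0-{max_retention} days of Time Travel")
    # Each day's churned data is retained for the Time Travel period, then for fail-safe
    return daily_churn_gb * (retention_days + fail_safe_days)


if __name__ == "__main__":
    for table_type, days in [("permanent", 1), ("permanent", 30), ("transient", 1)]:
        print(table_type, days, historical_storage_gb(10, table_type, days))
```

With 10 GB of daily churn, a permanent table with 1 day of Time Travel holds roughly 80 GB of history, while a transient table holds roughly 10 GB, which is why transient tables suit staging data that can be rebuilt.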

Storage optimization strategies

Design data structures for optimal storage consumption and retrieval efficiency.

Clustering optimization: Recluster only when cost-effective based on query pruning benefits.

Materialized view governance: Automate refresh frequency based on usage and dependency analysis.

Search optimization management: Enable selectively for performance-critical tables.

Data compression and organization: Rely on Snowflake’s automatic compression while auditing clustering health.

Advanced cost insights and automation

Leverage native insights, AI recommendations and automation to drive continuous optimization.

Cost insights and recommendations: Use Snowsight FinOps dashboards and optimization suggestions.

Automated cost anomaly detection: Employ Event Tables and Alerts to detect and flag abnormal spend.

Workload-specific optimization: Tune warehouses and caching strategies per workload type.

Performance vs. cost trade-off analysis: Apply telemetry data to evaluate efficiency across workloads and teams.
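A simple way to flag abnormal spend is to compare each day's credits with a trailing baseline. The Python sketch below applies a rolling z-score to a daily credit series. The 7-day window and threshold of 3 are assumptions, and the input would typically come from a query against metering views such as `SNOWFLAKE.ACCOUNT_USAGE.WAREHOUSE_METERING_HISTORY`, with flagged days routed to an alert or notification.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def spend_anomalies(daily_credits: Sequence[float], window: int = 7, threshold: float = 3.0) -> List[int]:
    """Return indexes of days whose credits exceed the trailing mean by more than `threshold` std devs."""
    flagged = []
    for i in range(window, len(daily_credits)):
        baseline = daily_credits[i - window:i]
        mu, sigma = mean(baseline), pstdev(baseline)
        if sigma == 0:
            # Flat baseline: treat any increase as anomalous instead of dividing by zero
            if daily_credits[i] > mu:
                flagged.append(i)
            continue
        if (daily_credits[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    credits = [10, 11, 9, 10, 12, 10, 11, 10, 45, 11]  # hypothetical daily credits
    print(spend_anomalies(credits))
```

Only upward deviations are flagged, since the goal is catching unexpected spend; note that a spike inflates the baseline for the following week, so a production version might exclude flagged days from later baselines.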

Snowflake differentiators

Compute and scaling differentiators

Per-second billing (one-minute minimum)

Instant elastic scaling with auto-suspend/resume

Multicluster auto-scaling for concurrency bursts

Workload isolation for precise cost attribution

Architecture and performance differentiators

True compute-storage separation

Result and warehouse caching for cost-efficient query execution

QAS for just-in-time compute

Zero-copy cloning for instant, storage-efficient environments

Highly compressed columnar storage

Data lifecycle and storage optimization

Flexible Time Travel retention configuration

Transient and temporary tables for lifecycle efficiency

Automated lifecycle management and archival

Advanced cost management

Cost insights and recommendations in Snowsight

Egress cost optimizer for cross-region data transfers

Cross-cloud data sharing with no data duplication

Snowflake Performance Index (SPI) for workload benchmarking

Operational efficiency

Near-zero administration and automatic maintenance

No downtime or patching windows

Instant creation of test/dev environments via zero-copy cloning

Outcome: A financially responsible, self-optimizing data platform where every credit drives measurable business value through automated governance controls, intelligent resource allocation and continuous cost-performance optimization.

Getting started with the Snowflake WAF

Assess current architecture against WAF principles using Snowflake documentation.

Align stakeholders across security, operations, data engineering and finance to define priorities.

Implement Snowflake-native controls, patterns and best practices per pillar.

Evolve continuously as new Snowflake capabilities (e.g., Cortex AI governance, Horizon Catalog) become available.

Conclusion: Architect once. Excel everywhere. Evolve always.

The Snowflake Well-Architected Framework is more than a set of guidelines; it’s a platform-native approach to building secure, reliable and efficient data systems. Each pillar is deeply interconnected: Robust security controls reinforce trust; operational excellence drives efficiency; reliability enables continuity; performance delivers speed; and FinOps supports sustainability.

By adopting these principles, organizations can create a foundation where data, AI and applications coexist securely and scale intelligently — all within Snowflake’s unified platform.

Visit our website or contact your Snowflake account team to learn more about incorporating WAF principles into your architecture.