Accelerating PyTorch Innovation at Scale: Snowflake at PyTorch Conference 2025

The 2025 PyTorch Conference brought together thousands of researchers, developers and industry leaders from around the world to share the latest breakthroughs in AI and machine learning, spanning deep learning, systems optimizations and open source innovation.

As a Premier Member of the PyTorch Foundation, Snowflake continues to contribute to the advancement of the PyTorch ecosystem. At this year’s PyTorch Conference, our AI Research and Engineering teams presented new work addressing four of the most pressing challenges in scalable AI: (1) training larger models efficiently, (2) orchestrating thousands of models in parallel, (3) accelerating inference, and (4) balancing multilingual performance with efficiency.

Challenge 1: Overcoming the limits of deep learning at scale

Deep learning is driving massive advancements in AI, impacting areas such as natural language processing, vision, speech and multimodal applications. However, sustaining this progress requires practical solutions for scaling up training. Developers face persistent hardware bottlenecks in memory, compute, communication and storage, which can slow innovation and increase costs.

Our solution

DeepSpeed is an open source deep learning optimization library that simplifies and accelerates distributed model training. Designed for ease of use, it delivers high performance out of the box on anything from one GPU to 100K GPUs.

DeepSpeed democratizes large-scale AI by reducing the budget and hardware investment needed to train state-of-the-art models. It does so through a suite of system optimizations targeting memory, compute, communication and I/O, allowing developers to train models that are 10x larger and faster, without linear cost increases. (ref: this paper, ZeRO-Offload paper, ZeRO-Infinity paper)
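
To give a feel for where the memory savings come from, here is a back-of-the-envelope sketch of per-GPU model-state memory under ZeRO-style partitioning. It assumes mixed-precision training with Adam (2 bytes per parameter for fp16 weights, 2 for fp16 gradients and 12 for fp32 optimizer state), which is the accounting used in the ZeRO papers; the function name and constants are illustrative and not part of the DeepSpeed API.

```python
# Rough ZeRO memory model. Assumption: mixed-precision training with Adam.
PARAM_BYTES = 2    # fp16 weights
GRAD_BYTES = 2     # fp16 gradients
OPTIM_BYTES = 12   # fp32 master weights + Adam momentum + Adam variance


def model_state_bytes_per_gpu(num_params: float, num_gpus: int, stage: int) -> float:
    """Bytes of model state held by each GPU (stage 0 = plain data parallelism)."""
    if stage not in (0, 1, 2, 3):
        raise ValueError("stage must be 0, 1, 2 or 3")
    params = PARAM_BYTES * num_params
    grads = GRAD_BYTES * num_params
    optim = OPTIM_BYTES * num_params
    if stage >= 1:   # stage 1 partitions optimizer state across GPUs
        optim /= num_gpus
    if stage >= 2:   # stage 2 also partitions gradients
        grads /= num_gpus
    if stage >= 3:   # stage 3 also partitions the weights themselves
        params /= num_gpus
    return params + grads + optim


# Hypothetical workload: a 7.5B-parameter model on 64 GPUs.
for stage in range(4):
    gigabytes = model_state_bytes_per_gpu(7.5e9, 64, stage) / 1e9
    print(f"stage {stage}: {gigabytes:.2f} GB per GPU")
```

Under these assumptions, the same model drops from about 120 GB of model state per GPU with no partitioning to under 2 GB at stage 3. Activations, temporary buffers and fragmentation are not counted, so real footprints will be higher.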

Tunji Ruwase, Co-Founder and Lead of DeepSpeed and Principal Software Engineer at Snowflake, emphasized that scaling does not mean simply increasing GPU capacity; it’s about smarter systems engineering.

At Snowflake, we’ve extended these capabilities through our ArcticTraining framework, built directly on DeepSpeed, to simplify and accelerate post-training for Snowflake Cortex AI and enterprise AI workloads. This collaboration embodies our shared goal: making state-of-the-art AI training scalable, efficient and accessible to every organization.

Resources:

- Watch the session: “DeepSpeed: Efficient Training Scalability for Deep Learning” by Tunji Ruwase, Co-Founder of DeepSpeed and Principal Software Engineer

- Get the slides

Challenge 2: Scaling model training across thousands of data sets and transformers

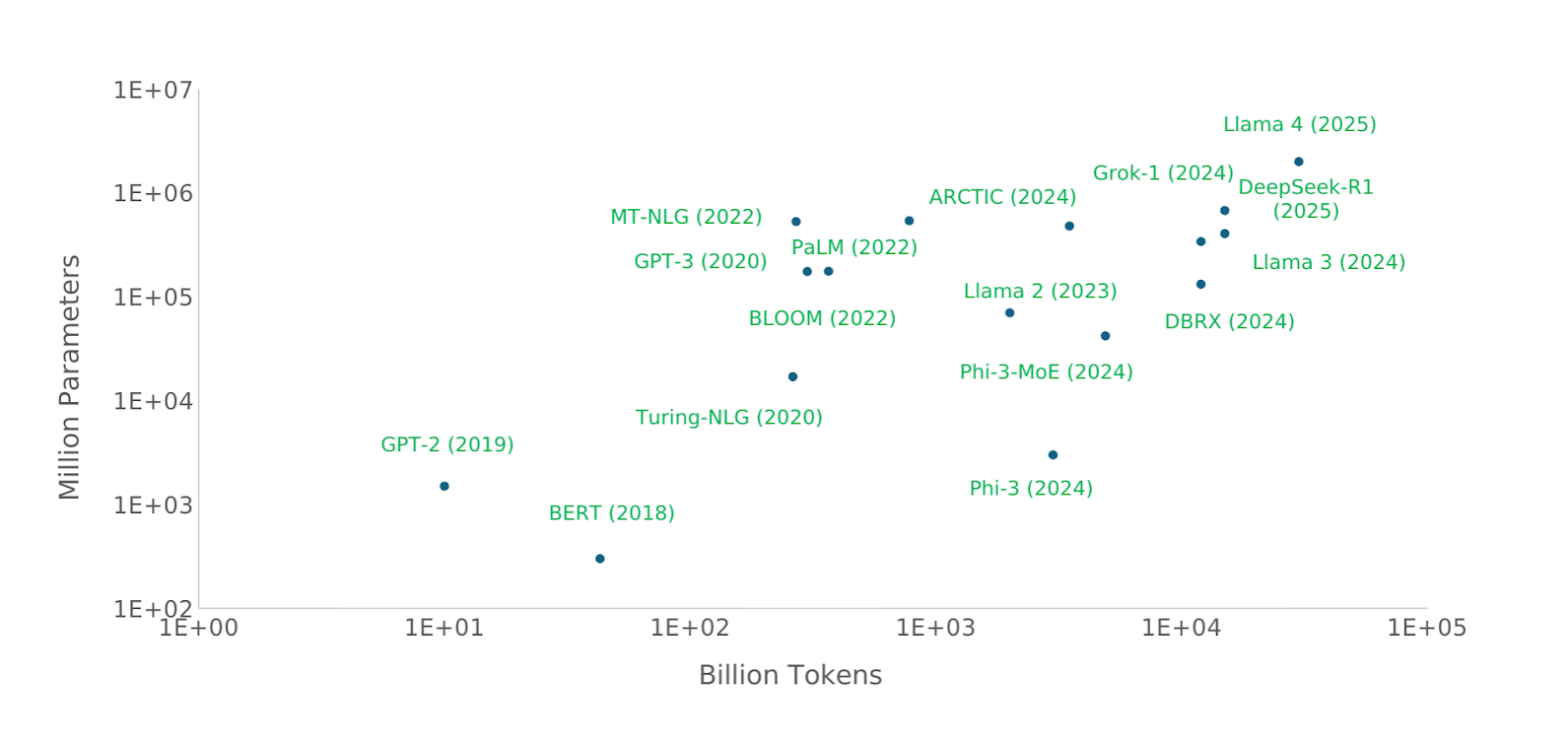

Transformer models are becoming increasingly vital for modern time-series forecasting, particularly in retail where data sets are often segmented across products.

This requires creating hundreds, if not thousands, of individual time-series models, one for each segment, and training them at the same time. Training these models across partitions involves large data sets, complex transforms and sophisticated orchestration, all under resource constraints. The naive approach is to train thousands of PyTorch models sequentially, which results in long training times, fewer iteration cycles and inefficient use of resources.

Our solution

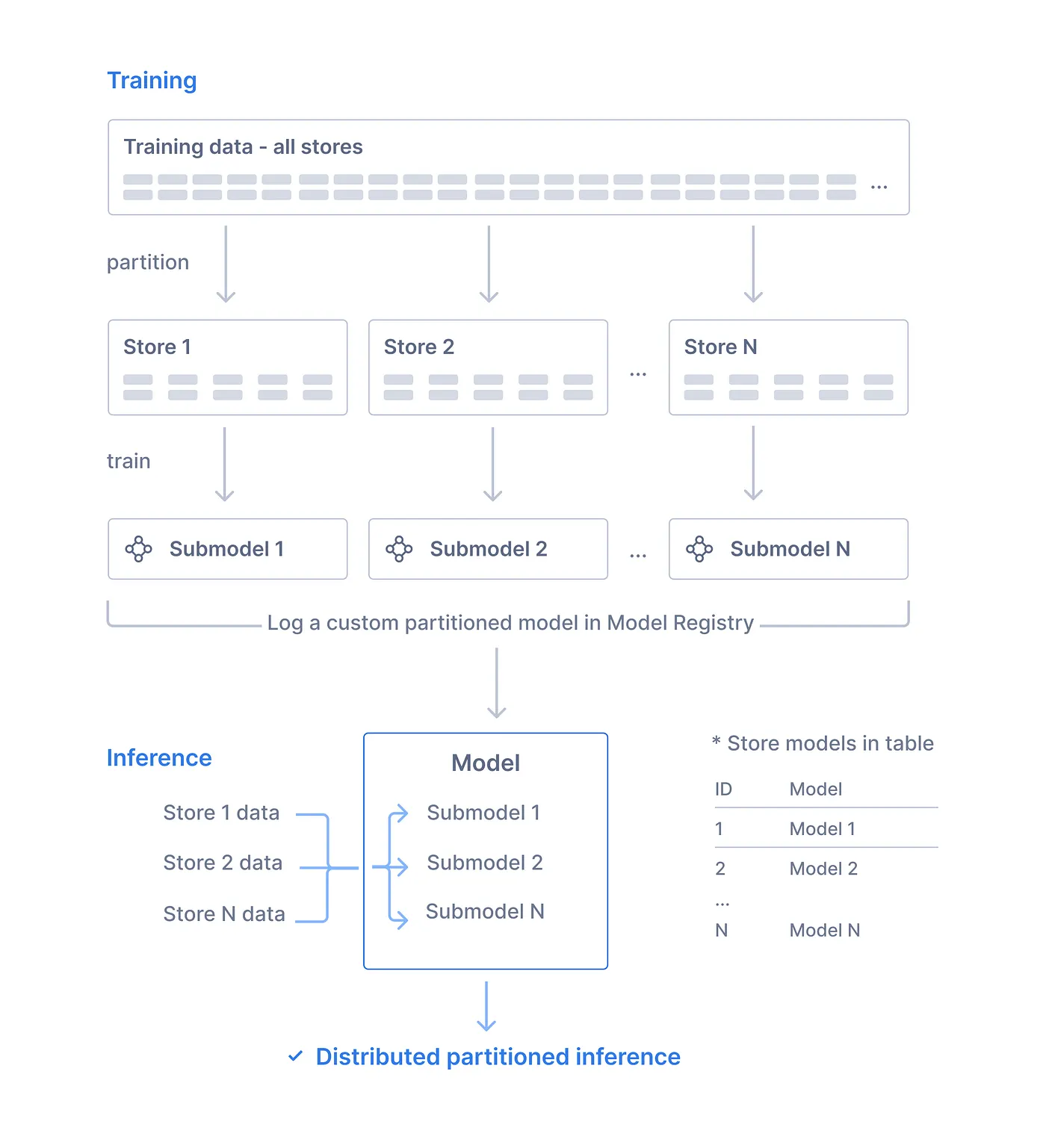

Our many-model training framework simplifies the process of training and deploying large-scale models by abstracting away complex infrastructure and partitioning challenges. It trains thousands of PyTorch models in parallel by leveraging Ray's scheduling and distributed processing capabilities to keep resources well utilized.

Snowflake ML Container Runtime provides a preconfigured Python environment, powered by Ray, designed for ML development that excels at ingesting data on a large scale and allows developers to leverage open source Python libraries for data preparation, feature engineering, modeling and more. For large-scale training, the ML Container Runtime enhances Ray to integrate efficiently with Snowflake data and facilitate orchestration of parallel jobs across multiple resources.

The many-model framework (see Figure 2) integrates highly parallel data loading with distributed processing, optimizing for cost-efficiency, resource utilization and execution time. This approach enables users to train larger, more complex transformer models on significantly larger, partitioned data sets, and to retrain them more frequently. The result is a higher velocity in developing and deploying advanced, ML-powered forecasting applications with superior predictive quality.
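
To make the contrast with sequential training concrete, here is a minimal sketch of the many-model pattern: one independent model per partition, with fits dispatched to a pool of workers. This is not the Snowflake MMT API. A standard-library thread pool stands in for Ray's scheduler, a least-squares trend line stands in for a PyTorch transformer, and `fit_segment`, `train_many`, `forecast` and the SKU names are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import fmean


def fit_segment(item):
    """Fit one tiny model for one partition: a least-squares line y = a + b*t."""
    segment_id, series = item
    n = len(series)  # assumes at least two observations per segment
    t_mean = (n - 1) / 2
    y_mean = fmean(series)
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(series))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = y_mean - slope * t_mean
    return segment_id, (intercept, slope)


def train_many(partitions, max_workers=4):
    """Train one model per partition concurrently instead of one after another."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(fit_segment, partitions.items()))


def forecast(model, step):
    """Predict the value at time index `step` from a fitted (intercept, slope)."""
    intercept, slope = model
    return intercept + slope * step


# Hypothetical retail data, partitioned by product.
partitions = {
    "sku_a": [10.0, 12.0, 14.0, 16.0],
    "sku_b": [5.0, 5.0, 5.0, 5.0],
    "sku_c": [9.0, 6.0, 3.0, 0.0],
}
models = train_many(partitions)
next_step = {sku: forecast(model, 4) for sku, model in models.items()}
print(next_step)
```

Because each partition's fit is independent, the work parallelizes trivially. With Ray, the same shape is typically expressed by decorating the per-partition function with `@ray.remote` and collecting results with `ray.get`, which spreads the tasks across a cluster rather than local threads.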

Snowflake is committed to making distributed PyTorch seamless and scalable for the enterprise. We empower users to run PyTorch models directly at scale within the Snowflake AI Data Cloud. Learn more about building large-scale ML and deep learning models in Snowflake, and get started quickly by running distributed PyTorch models with Snowflake Notebooks.

Resources:

- Watch the session: “Many-model” Time Series Forecasting: Scaling PyTorch Training Across 1000s of Models by Vinay Sridhar, Senior Product Manager, and Shulin Chen, Senior Software Engineer

- Get the slides

- Try the notebook using Snowflake's Many Model Training (MMT) API.

Challenge 3: Solving tradeoffs between speed, cost and scale in LLM serving

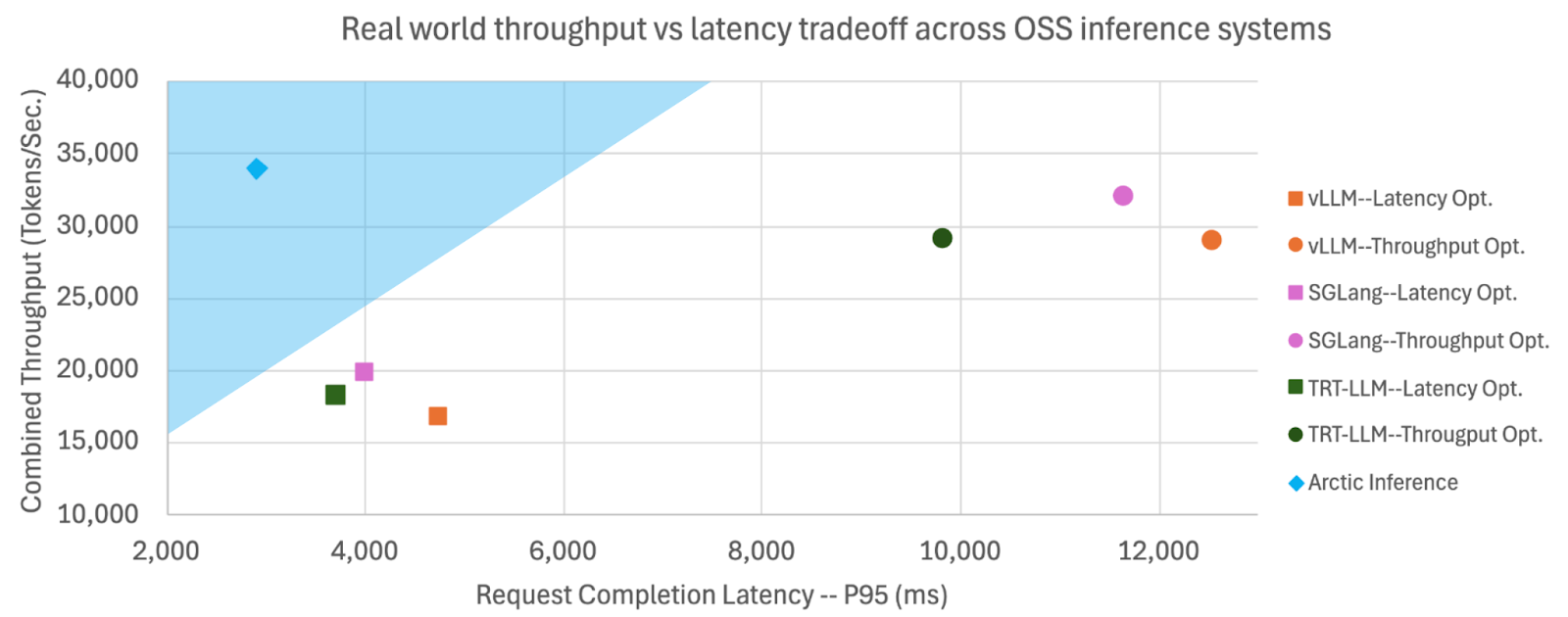

Inference is becoming the primary workload in AI, yet current systems force developers to make costly trade-offs between combined throughput, generation speed and prefill speed because their parallelism strategies are inflexible.

Unlike training workloads, which are uniform across batches, inference workloads face dynamic, unpredictable and bursty patterns from real-world traffic. Existing parallelism strategies, such as tensor and data parallelism, were designed for homogeneous, batch-optimized training, leading to significant trade-offs in real-world inference.

Our solution

Built by Snowflake AI Research, Arctic Inference is an open source vLLM plugin that brings Snowflake’s inference innovations to the community to build on, extend and use.

The plugin offers a range of advanced optimizations specifically designed to address key bottlenecks in enterprise AI workloads, from decoding and prefill inefficiencies to underoptimized embedding inference. The modular and pluggable architecture allows developers using vLLM to integrate it seamlessly without code changes, instantly leveraging these performance benefits.

Compared to other baseline open source solutions, techniques such as SwiftKV, Ulysses, Shift Parallelism, Suffix Decoding and Arctic Speculator allow Arctic Inference to deliver up to:

- 3.4x faster time-to-first-token (TTFT)

- 1.7x higher throughput

- 1.75x faster time-per-output-token (TPOT)

This tool is designed to deliver the fastest, most cost-effective open source inference for enterprise AI.
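
For readers less familiar with the three metrics above, the sketch below shows one common way to compute them from per-token timestamps. The definitions are assumptions based on general usage (TTFT as arrival-to-first-token latency, TPOT as the mean gap between subsequent tokens, throughput as total output tokens over wall-clock time); the post does not specify how the benchmarks measured them, and `RequestTrace` and the sample timings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RequestTrace:
    arrival: float            # when the request reached the server, in seconds
    token_times: list[float]  # when each output token was emitted, in seconds


def ttft(trace: RequestTrace) -> float:
    """Time-to-first-token: dominated by queueing and prefill."""
    return trace.token_times[0] - trace.arrival


def tpot(trace: RequestTrace) -> float:
    """Time-per-output-token: mean gap between tokens after the first."""
    n = len(trace.token_times)
    if n < 2:
        return 0.0
    return (trace.token_times[-1] - trace.token_times[0]) / (n - 1)


def throughput(traces: list[RequestTrace]) -> float:
    """Combined output tokens per second across all requests."""
    start = min(t.arrival for t in traces)
    end = max(t.token_times[-1] for t in traces)
    total_tokens = sum(len(t.token_times) for t in traces)
    return total_tokens / (end - start)


# Two hypothetical, overlapping requests.
traces = [
    RequestTrace(arrival=0.0, token_times=[0.5, 0.75, 1.0, 1.25]),
    RequestTrace(arrival=0.5, token_times=[1.0, 1.5, 2.0]),
]
for trace in traces:
    print(f"TTFT={ttft(trace):.2f}s  TPOT={tpot(trace):.2f}s")
print(f"throughput={throughput(traces):.2f} tokens/s")
```

The tension described earlier shows up directly in these numbers: batching more requests together tends to raise throughput while lengthening TTFT and TPOT for each individual request.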

Resources:

- Watch the session: “Arctic Inference: Breaking the Speed-Cost Tradeoff in LLM Serving” by Aurick Qiao, Senior Software Engineer

- Learn more on the blog and published paper

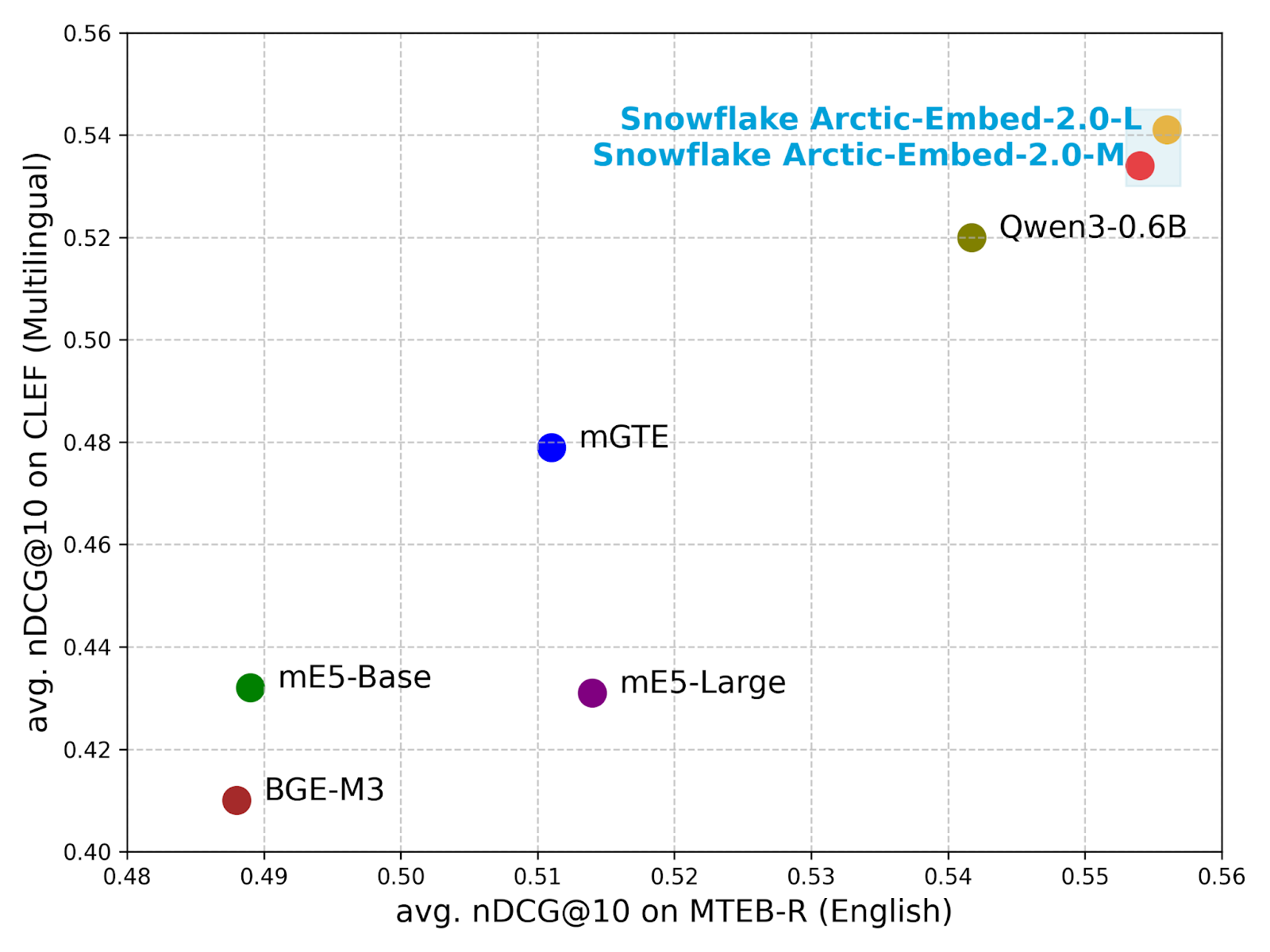

Challenge 4: Reconciling English and multilingual performance in embedding models

Achieving global information access depends on effective multilingual retrieval, but existing multilingual embeddings face a fundamental trade-off between language coverage and performance.

It is common for multilingual models to underperform their English-only counterparts on English retrieval evaluations, while English-focused models perform poorly on multilingual tasks. In addition, the high retrieval effectiveness of many state-of-the-art models comes at a cost: they require a large number of parameters and generate large embedding vectors, driving up the computational and economic costs of dense retrieval over large text collections.

Our solution

Arctic-Embed 2.0 is a state-of-the-art collection of open source text-embedding models engineered for precise and efficient multilingual retrieval.

Unlike previous models that experienced a decline in English retrieval quality, Arctic-Embed 2.0 maintains competitive retrieval quality across both multilingual and English-only benchmarks. It sets a new standard for multilingual embedding models, delivering high-quality multilingual text retrieval without sacrificing performance in English. Additionally, it supports Matryoshka Representation Learning (MRL), which allows for efficient embedding storage with significantly less quality degradation under compression than other options.
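
The storage benefit of MRL comes from how the embeddings are used: a model trained this way concentrates the most useful information in the leading dimensions, so a vector can be cut to a prefix and re-normalized before indexing. The sketch below illustrates that usage pattern only. The 4-dimensional vectors are toy values, not real Arctic-Embed outputs, and the helper names are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def truncate_embedding(vec, dim):
    """Keep only the first `dim` dimensions, then re-normalize."""
    return l2_normalize(vec[:dim])


def cosine(a, b):
    """Cosine similarity of two unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))


# Toy embeddings for illustration only.
query = [0.9, 0.1, 0.3, 0.1]
doc_a = [0.8, 0.2, 0.4, 0.0]  # intended as the relevant document
doc_b = [0.1, 0.9, 0.0, 0.3]  # intended as the irrelevant document

for dim in (4, 2):
    q = truncate_embedding(query, dim)
    score_a = cosine(q, truncate_embedding(doc_a, dim))
    score_b = cosine(q, truncate_embedding(doc_b, dim))
    print(f"dim={dim}: relevant={score_a:.3f} irrelevant={score_b:.3f}")
```

In this toy case the relevant document still outranks the irrelevant one after halving the vector size. How well rankings hold up on real data depends on the model having been trained with an MRL objective.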

We achieved this through a three-stage training framework: pretraining via masked language modeling, contrastive pretraining, and contrastive fine-tuning. We enabled generalizable multilingual retrieval performance by scaling to 140 million multilingual contrastive pretraining pairs. Read the blog to learn more.
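
As a rough illustration of what a contrastive stage optimizes, the sketch below implements an InfoNCE-style loss with in-batch negatives: each query should score its own paired document higher than every other document in the batch. This is an assumption about the general family of objective, not a statement of the exact loss, temperature or negative-sampling scheme used for Arctic-Embed 2.0, and the vectors are toy values.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce(queries, docs, temperature=0.05):
    """Mean cross-entropy of picking docs[i] for queries[i] among all docs."""
    losses = []
    for i, q in enumerate(queries):
        logits = [dot(q, d) / temperature for d in docs]
        # Numerically stable log-sum-exp over the batch.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


# Toy unit-length embeddings: each query matches the document at the same index.
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned_docs = [[1.0, 0.0], [0.0, 1.0]]
shuffled_docs = [[0.0, 1.0], [1.0, 0.0]]

print(f"aligned pairs:  {info_nce(queries, aligned_docs):.4f}")
print(f"shuffled pairs: {info_nce(queries, shuffled_docs):.4f}")
```

The loss is near zero when pairs line up and large when they do not, which is the signal that pulls paired texts together in embedding space during training.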

With more than 4 million Hugging Face downloads, the Arctic Embed 2.0 series is a trusted and versatile solution for the global research community.

Resources:

- Poster presentation at PyTorch Conference 2025: “Training Efficient and High-Quality Text Embedding Models for Search” by Puxuan Yu, Software Engineer

- Try Arctic Embed or download the Hugging Face model

- Read more in the published paper

Open source at scale

Snowflake is dedicated to contributing to and advancing the PyTorch ecosystem by tackling the practical challenges of deploying AI at scale. The Snowflake AI Data Cloud supports the AI and ML tools Python developers love, including PyTorch at scale for both training and inference.

Check out our open positions if you’re interested in working with us!