PLEASE NOTE: This post was originally published in 2018. It has been updated to reflect currently available products, features, and functionality.

The Snowflake Data Cloud has near-instant elasticity, allowing customers to scale up and down to meet demand. Whether this demand is predictable or highly variable, the system can flex bigger or smaller to deliver results for key data workloads, while never carrying more capacity than needed. What’s more, users get a data repository of almost unlimited scale that can be queried by many fully isolated compute clusters, so concurrent workloads can run without affecting one another, even when some of them see big spikes in activity. This flexibility is powerful, but with this power comes risk: will spikes in demand lead to runaway spending?

Snowflake balances the power of true cloud elasticity with clear visibility into usage and spend, monitoring and sending notifications when resource usage is higher than expected, and offering strong cost controls to shut off usage when caps are hit. This post describes how customers can use these features to strike the balance they need between flexibility and control.

A simple pricing model

Snowflake’s pricing model is primarily based on two consumption-based metrics: usage of compute and of data storage.

The charge for compute is based on the number of credits used to run queries or perform a service, such as loading data with Snowpipe. The price of a credit depends on the edition used: Standard, Enterprise, or Business Critical, each of which comes with a different set of features. Virtual warehouses and serverless features each consume credits at a defined rate. All compute charges are billed on actual usage, down to the second, rather than on predicted or estimated usage.

The charge for storage is based on the average number of bytes stored per month, plus any fees the cloud provider passes on for moving data across regions or clouds. Storage costs benefit from automatic compression of all stored data, and an account’s storage bill is calculated from the total compressed size.

All charges are usage-based

For example, in the United States, Snowflake storage costs begin at a flat rate of $23 per compressed TB per month, based on the average amount stored across the days in the month. On Standard Edition, compute costs about $0.00056 per second for each credit per hour that a warehouse consumes (roughly $2 per credit). Business Critical Edition includes customer-managed encryption keys and other security-hardened features, and it enables HIPAA and PCI compliance. It costs about $0.0011 per second on the same basis (roughly $4 per credit).

Clear and straightforward compute sizing

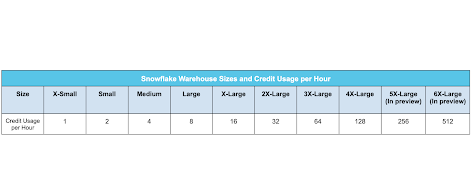

Snowflake users can create any number of “virtual warehouses,” which are logical groupings of compute resources that power query execution. Virtual warehouses are available in 10 “T-shirt” sizes: X-Small, Small, Medium, Large, and X-Large through 6X-Large (5X-Large and 6X-Large are in preview). Each size consumes a fixed number of credits per hour, billed by the second. Going up one size doubles both the compute capacity and the credit rate. For many workloads, performance scales roughly linearly: doubling the warehouse size typically halves the time an operation takes, so the total cost stays about the same.
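The sketch below shows why a larger warehouse need not cost more. Each size step doubles the credit rate, starting at 1 credit per hour for X-Small. It assumes ideal linear scaling, where each step exactly halves runtime. Real queries only approximate this. The helper `credits_for_job` is hypothetical.

```python
# Standard warehouse sizes, smallest to largest; each step doubles credits/hour.
SIZES = ["X-Small", "Small", "Medium", "Large", "X-Large",
         "2X-Large", "3X-Large", "4X-Large", "5X-Large", "6X-Large"]
CREDITS_PER_HOUR = {size: 2 ** step for step, size in enumerate(SIZES)}


def credits_for_job(size: str, baseline_seconds_on_xsmall: float) -> float:
    """Credits for a job, assuming each size step exactly halves its runtime."""
    step = SIZES.index(size)
    runtime_seconds = baseline_seconds_on_xsmall / (2 ** step)
    return CREDITS_PER_HOUR[size] * runtime_seconds / 3600


# An 8-hour job on X-Small uses 8 credits. Under ideal scaling it also uses
# 8 credits on Large, where it finishes in 1 hour.
for size in ("X-Small", "Large", "4X-Large"):
    print(size, CREDITS_PER_HOUR[size], credits_for_job(size, 8 * 3600))
```

Under this assumption, choosing a bigger size trades the same spend for a shorter wait.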

No charge for idle compute time

Snowflake offers customers true resource elasticity. With our consumption-based pricing model, you’re billed by the second. Near-instant auto-suspend and auto-resume help you avoid paying for resources you don’t need. Each workload can have its own set of compute resources, so it can scale independently without affecting other jobs. Meanwhile, all of these clusters can access the same data, so silos don’t hinder data usability.

Each query runs on the virtual warehouse in use by the session that submits it. One major benefit of Snowflake is that you can suspend a virtual warehouse when no queries are running. You can do this manually, or automatically with a user-defined rule (e.g., “suspend after 2 minutes of inactivity”). While a warehouse is suspended, you are not charged for idle compute time.
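To see how an auto-suspend rule shapes the bill, the sketch below models one warehouse. The warehouse resumes when a query arrives. It keeps running, and billing, for `auto_suspend` seconds after the last query ends, then suspends. Each run is billed for at least 60 seconds; Snowflake documents this minimum for every warehouse start. The function and its inputs are hypothetical. This is a simplified model, not Snowflake's scheduler.

```python
def billed_seconds(queries, auto_suspend, minimum=60):
    """Billed running seconds for a warehouse under an auto-suspend rule.

    queries: list of (start, end) times in seconds, one tuple per query.
    auto_suspend: idle seconds after the last query before the warehouse suspends.
    minimum: minimum billed seconds each time the warehouse resumes.
    """
    total = 0
    run_start = run_end = None
    for start, end in sorted(queries):
        if run_start is None:
            # First query resumes the warehouse.
            run_start, run_end = start, end
        elif start > run_end + auto_suspend:
            # Idle past the threshold: bill the finished run, including its idle tail.
            total += max(minimum, run_end + auto_suspend - run_start)
            run_start, run_end = start, end
        else:
            # Query arrives before suspension, so the current run continues.
            run_end = max(run_end, end)
    if run_start is not None:
        total += max(minimum, run_end + auto_suspend - run_start)
    return total


# Two short bursts an hour apart, with a 2-minute auto-suspend rule:
# 210 s for the first run plus 150 s for the second, versus ~3,750 s always-on.
print(billed_seconds([(0, 30), (60, 90), (3600, 3630)], auto_suspend=120))
```

A shorter `auto_suspend` value trims the idle tail after each burst. If it is set too short, the warehouse may suspend and resume repeatedly, and each resume incurs its own minimum charge.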

Because suspending, resuming, and resizing a warehouse are essentially instantaneous, customers can precisely match their spending to their actual usage. They don’t need to carry headroom for unexpected usage or worry about capacity planning.

With some other data platforms, you must terminate a cluster to stop being billed, and terminating it deletes the data stored in that cluster. If you later need to query that data, you have to reprovision the cluster and reload the data, which costs time and money. Because of this inefficiency, platforms with this design are often left running 24×7, accruing charges whether or not any queries are running.

In Snowflake, when you submit a query to a suspended virtual warehouse with auto-resume enabled, the platform automatically resumes it. No manual reprovisioning is needed. Capacity is available when you need it, and you don’t pay for it when you don’t.

Flexibility and predictability

When you run queries in Snowflake, there’s no need for capacity forecasting, and there are no fixed usage quotas or hidden price premiums. You pay only for what you use. Capacity can be increased or decreased in near real time, manually or automatically, to meet demand as it happens.

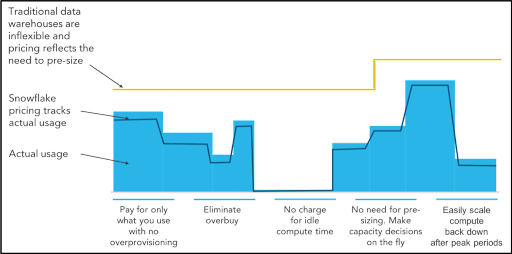

On-premises data platforms, as well as cloud data platforms built on pre-cloud architectures, make it nearly impossible to match capacity to demand in real time. When the system can’t provision capacity just in time, or the pricing model is too rigid, you’re left with high costs, limited performance, or both.

High costs come from carrying more capacity than you actually need, as a buffer against unexpected demand. Low performance comes from a system that can’t expand quickly enough when demand rises. Snowflake avoids both problems. Compute can be added or removed in near real time, whether manually, on a schedule, or through auto-scaling. Customers have enough capacity when they need it, without over-provisioning.
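The trade-off above can be made concrete with a toy model. The `demand` list is hypothetical: it gives the credits of work needed in each hour of a day with one spike. The sketch compares three choices. The first is fixed capacity sized for the peak and left running, which means high cost. The second is fixed capacity sized below the peak, which leaves unmet demand and low performance. The third is idealized elastic capacity that tracks demand with no lag.

```python
def fixed_capacity_credits(hourly_demand):
    """Credits used when capacity is sized for the peak hour and never released."""
    return max(hourly_demand) * len(hourly_demand)


def elastic_credits(hourly_demand):
    """Credits used when capacity tracks demand hour by hour (idealized, no lag)."""
    return sum(hourly_demand)


def unmet_demand(hourly_demand, capacity):
    """Credit-hours of work above a fixed capacity that must queue or run slowly."""
    return sum(max(0, d - capacity) for d in hourly_demand)


demand = [1, 1, 2, 8, 2, 1, 0, 0]  # hypothetical credits needed in each hour

print(fixed_capacity_credits(demand))  # sized for the peak: pays for idle headroom
print(elastic_credits(demand))         # pays only for work actually done
print(unmet_demand(demand, 2))         # sized for typical load: the spike backs up
```

Sizing for the peak in this example costs more than four times what elastic capacity costs. Sizing for typical load leaves the spike underserved.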

Some cloud data platforms charge based on how many terabytes a query scans or returns, rather than on how long queries run. While this model is flexible, it’s problematic. Users and applications often can’t predict how much data a given query will scan or return, so monthly bills can vary widely and unpredictably.

Cost governance and control

What if you’re concerned that Snowflake’s high degree of elasticity will let users blow through the budget too quickly? Some customers prioritize agility above all else and are happy that data engineers and analysts can do everything they can imagine with their data.

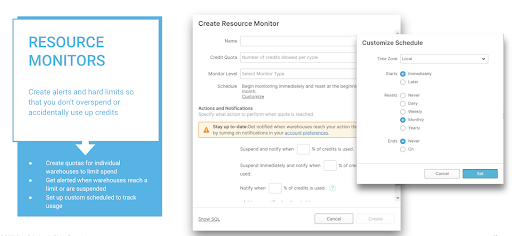

For organizations that want to control spending, Snowflake provides a robust toolset for cost governance and control. Users get full visibility into historical and predicted usage patterns and can set alerts or hard limits on consumption at a granular level. Limits can apply to daily, weekly, monthly, or yearly consumption. At each company’s discretion, a limit can send an informational notification or act as a hard cap that prevents further usage once the quota is reached. Limits can be defined at the virtual warehouse level, so different departments and workloads with varying levels of criticality can set limits that fit their specific use cases.
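In Snowflake, these limits are configured as resource monitors. A resource monitor sets a credit quota per period and fires NOTIFY or SUSPEND actions at chosen percentages of that quota. The sketch below only models the threshold logic. The `Trigger` class, the action labels, and both functions are hypothetical and are not Snowflake's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trigger:
    percent: float  # share of the credit quota at which this trigger fires
    action: str     # hypothetical label, e.g. "notify" or "suspend"


def fired_actions(credits_used: float, credit_quota: float,
                  triggers: list[Trigger]) -> list[str]:
    """Return the actions whose thresholds have been reached, lowest first."""
    used_percent = 100 * credits_used / credit_quota
    return [t.action for t in sorted(triggers, key=lambda t: t.percent)
            if used_percent >= t.percent]


def day_cap_reached(daily_credits: list[float], credit_quota: float) -> Optional[int]:
    """Return the 0-based day on which cumulative usage first hits the quota, or None."""
    used = 0.0
    for day, credits in enumerate(daily_credits):
        used += credits
        if used >= credit_quota:
            return day
    return None


# Notify at 75% of a 100-credit quota; hard cap at 100%.
triggers = [Trigger(75, "notify"), Trigger(100, "suspend")]
print(fired_actions(80, 100, triggers))        # only the notification has fired
print(day_cap_reached([30, 30, 30, 30], 100))  # cap is hit on the fourth day
```

Keeping notification and suspension as separate thresholds matches the informational-versus-hard-cap choice described above. A team can get an early warning well before work is actually stopped.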

Another cost governance feature, Object Tagging, makes it easy to categorize different objects and group spending by tags.
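Grouping spending by tag amounts to a join and an aggregation. The sketch below assumes per-warehouse credit records and a mapping from each object to its tags. In Snowflake, that data would come from usage views such as `WAREHOUSE_METERING_HISTORY` and `TAG_REFERENCES` in the `ACCOUNT_USAGE` schema. The warehouse names, the `cost_center` tag, and `spend_by_tag` itself are hypothetical.

```python
from collections import defaultdict


def spend_by_tag(usage, tags, tag_name):
    """Sum credits per value of tag_name; untagged objects go to 'untagged'."""
    totals = defaultdict(float)
    for obj, credits in usage:
        value = tags.get(obj, {}).get(tag_name, "untagged")
        totals[value] += credits
    return dict(totals)


# Hypothetical credit records (warehouse, credits) and tag assignments.
usage = [("etl_wh", 120.0), ("bi_wh", 45.5), ("adhoc_wh", 10.0), ("etl_wh", 30.0)]
tags = {"etl_wh": {"cost_center": "engineering"},
        "bi_wh": {"cost_center": "finance"}}

print(spend_by_tag(usage, tags, "cost_center"))
```

Reporting untagged spending explicitly, rather than dropping it, makes gaps in tagging coverage visible.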

Keeping it simple, cost-effective, and highly performant

Unlike traditional on-premises data warehouse technology or cloud-based data warehouses that weren’t designed to take advantage of cloud infrastructure elasticity, Snowflake’s Data Cloud makes working with data and budgets easy. Snowflake makes it cost-effective to get as much insight as possible from your data, with far less administration effort than with traditional data warehouse solutions. By avoiding the headaches of traditional solutions, Snowflake enables you to focus on strategic data analytics and engineering projects that advance your business—without breaking the bank.